R Code for hypothesis testing

Distribution

Hide

Binomial

\(P(\mathcal{X}=k)\ = {n \choose k}p^k(1-p)^{n-k}\)

rbinom(5,10,0.3)

## [1] 2 5 6 4 3

qbinom(0.05,10,0.3)

## [1] 1

pbinom(1,10,0.3)

## [1] 0.1493083

dbinom(1,10,0.3)

## [1] 0.1210608Normal Distribution

\(P(\mathcal{X}=k)\ = \frac{1}{\sqrt{2\pi}\sigma}*e^{-\frac{(x-\mu)^2}{2\sigma^2}}\)

rnorm(5)

## [1] -0.4914706 1.8042584 -0.7052925 0.9350606 0.6894319

qnorm(0.05)

## [1] -1.644854

pnorm(1.96)

## [1] 0.9750021

dnorm(1.96)

## [1] 0.05844094Descriptive Statistics

hide

Table

################################################################

# Biostatistical Methods I #

# Descriptive Statistics #

# Author: Cody Chiuzan #

################################################################

# Library 'arsenal' is used for descriptive statistics tables

# Library 'dplyr' has nice functions for data manipulation, also mutate()

# Library 'ggplot2' is used for graphing

library(arsenal)

library(dplyr)

library(ggplot2)

#########################################################################

# Import Data #

#########################################################################

# Set working directory

low_birth_all <- read.csv(here::here("R_Code/R - Module 2/lowbwt_ALL.csv"))

names(low_birth_all)## [1] "low" "age" "lwt" "race" "smoke" "ht" "ui" "ftv" "ptl"

## [10] "bwt"head(low_birth_all)## low age lwt race smoke ht ui ftv ptl bwt

## 1 0 19 182 black 0 0 1 0 0 2523

## 2 0 33 155 other 0 0 0 1 0 2551

## 3 0 20 105 white 1 0 0 1 0 2557

## 4 0 21 108 white 1 0 1 1 0 2594

## 5 0 18 107 white 1 0 1 0 0 2600

## 6 0 21 124 other 0 0 0 0 0 2622dim(low_birth_all)## [1] 189 10summary(low_birth_all)## low age lwt race

## Min. :0.0000 Min. :14.00 Min. : 80.0 Length:189

## 1st Qu.:0.0000 1st Qu.:19.00 1st Qu.:110.0 Class :character

## Median :0.0000 Median :23.00 Median :121.0 Mode :character

## Mean :0.3122 Mean :23.24 Mean :129.7

## 3rd Qu.:1.0000 3rd Qu.:26.00 3rd Qu.:140.0

## Max. :1.0000 Max. :45.00 Max. :250.0

## smoke ht ui ftv

## Min. :0.0000 Min. :0.00000 Min. :0.0000 Min. :0.0000

## 1st Qu.:0.0000 1st Qu.:0.00000 1st Qu.:0.0000 1st Qu.:0.0000

## Median :0.0000 Median :0.00000 Median :0.0000 Median :0.0000

## Mean :0.3915 Mean :0.06349 Mean :0.1481 Mean :0.4709

## 3rd Qu.:1.0000 3rd Qu.:0.00000 3rd Qu.:0.0000 3rd Qu.:1.0000

## Max. :1.0000 Max. :1.00000 Max. :1.0000 Max. :1.0000

## ptl bwt

## Min. :0.0000 Min. : 709

## 1st Qu.:0.0000 1st Qu.:2414

## Median :0.0000 Median :2977

## Mean :0.1587 Mean :2945

## 3rd Qu.:0.0000 3rd Qu.:3475

## Max. :1.0000 Max. :4990str(low_birth_all)## 'data.frame': 189 obs. of 10 variables:

## $ low : int 0 0 0 0 0 0 0 0 0 0 ...

## $ age : int 19 33 20 21 18 21 22 17 29 26 ...

## $ lwt : int 182 155 105 108 107 124 118 103 123 113 ...

## $ race : chr "black" "other" "white" "white" ...

## $ smoke: int 0 0 1 1 1 0 0 0 1 1 ...

## $ ht : int 0 0 0 0 0 0 0 0 0 0 ...

## $ ui : int 1 0 0 1 1 0 0 0 0 0 ...

## $ ftv : int 0 1 1 1 0 0 1 1 1 0 ...

## $ ptl : int 0 0 0 0 0 0 0 0 0 0 ...

## $ bwt : int 2523 2551 2557 2594 2600 2622 2637 2637 2663 2665 ...# Check for missing values

anyNA(low_birth_all)## [1] FALSEfilter(low_birth_all, is.na(age))## [1] low age lwt race smoke ht ui ftv ptl bwt

## <0 rows> (or 0-length row.names)# Some details about the data: 189 births info were collected at a medical center.

# The dataset contains the following 10 variables:

# low: indicator of birth weight less than 2.5kg

# age: mother's age in years

# lwt: mother's weight in pounds at last menstrual period

# race: mothers race ("white", "black", "other")

# smoke: smoking status during pregnancy (yes/no)

# ht: history of hypertension (yes/no)

# ui: presence of uterine irritability (yes/no)

# ftv: physician visit during the first trimester (yes/no)

# ptl: previous premature labor (yes/no)

# bwt: birth weight in grams

#########################################################################

# Descriptive Statistics: Continuous Variables #

#########################################################################

mean(low_birth_all$age) # Mean## [1] 23.2381median(low_birth_all$age) # Median## [1] 23sd(low_birth_all$age) # Standard Deviation## [1] 5.298678quantile(low_birth_all$age) # Min, 25ht, 50th, 75th, Max## 0% 25% 50% 75% 100%

## 14 19 23 26 45quantile(low_birth_all$age, c(0.10, 0.30, 0.60)) # Tertiles## 10% 30% 60%

## 17 20 24# A more condensed way to obtain summary statistics

summary(low_birth_all$age)## Min. 1st Qu. Median Mean 3rd Qu. Max.

## 14.00 19.00 23.00 23.24 26.00 45.00# Summary statistics for each level of another categorical variable

mean <- tapply(low_birth_all$bwt, low_birth_all$race, mean)

sd <- tapply(low_birth_all$bwt, low_birth_all$race, sd)

med <- tapply(low_birth_all$bwt, low_birth_all$race, median)

min <- tapply(low_birth_all$bwt, low_birth_all$race, min)

max <- tapply(low_birth_all$bwt, low_birth_all$race, max)

cbind(mean, sd, med, min, max)## mean sd med min max

## black 2719.692 638.6839 2849 1135 3860

## other 2804.015 721.3011 2835 709 4054

## white 3103.740 727.7242 3076 1021 4990# Use function tableby() from library 'arsenal' to create a summary table (called Table 1 in publications)

# Use continuous and categorical variables

# First table - not ideal

tab1 <- tableby(~ age + bwt + smoke, data = low_birth_all)

summary(tab1)##

##

## | | Overall (N=189) |

## |:---------------------------|:------------------:|

## |**age** | |

## | Mean (SD) | 23.238 (5.299) |

## | Range | 14.000 - 45.000 |

## |**bwt** | |

## | Mean (SD) | 2944.656 (729.022) |

## | Range | 709.000 - 4990.000 |

## |**smoke** | |

## | Mean (SD) | 0.392 (0.489) |

## | Range | 0.000 - 1.000 |# Change variable names/labels

my_labels <-

list(

age = "Age(yrs)",

bwt = "Birthweight(g)",

smoke = "Smoker",

race = "Race"

)

# Clean the output

my_controls <- tableby.control(

total = T,

test = F,

# No test p-values yet

numeric.stats = c("meansd", "medianq1q3", "range", "Nmiss2"),

cat.stats = c("countpct", "Nmiss2"),

stats.labels = list(

meansd = "Mean (SD)",

medianq1q3 = "Median (Q1, Q3)",

range = "Min - Max",

Nmiss2 = "Missing",

countpct = "N (%)"

)

)

# Make 'smoke' a factor to show N (%)

birth_df <- low_birth_all %>%

mutate(smoke = factor(smoke, labels = c("No", "Yes"))) # Start labeling with 0 (increasing order)

# Second table

tab2 <-

tableby(~ age + bwt + smoke, data = birth_df, control = my_controls)

summary(tab2,

title = "Descriptive Statistics: Lowbirth Data",

labelTranslations = my_labels,

text = T)##

## Table: Descriptive Statistics: Lowbirth Data

##

## | | Overall (N=189) |

## |:------------------|:-----------------------------:|

## |Age(yrs) | |

## |- Mean (SD) | 23.238 (5.299) |

## |- Median (Q1, Q3) | 23.000 (19.000, 26.000) |

## |- Min - Max | 14.000 - 45.000 |

## |- Missing | 0 |

## |Birthweight(g) | |

## |- Mean (SD) | 2944.656 (729.022) |

## |- Median (Q1, Q3) | 2977.000 (2414.000, 3475.000) |

## |- Min - Max | 709.000 - 4990.000 |

## |- Missing | 0 |

## |Smoker | |

## |- No | 115 (60.8%) |

## |- Yes | 74 (39.2%) |

## |- Missing | 0 |# Tabulation by race categories

tab3 <-

tableby(race ~ age + bwt + smoke, data = birth_df, control = my_controls)

summary(tab3,

title = "Descriptive Statistics: Lowbirth Data",

labelTranslations = my_labels,

text = T)##

## Table: Descriptive Statistics: Lowbirth Data

##

## | | black (N=26) | other (N=67) | white (N=96) | Total (N=189) |

## |:------------------|:-----------------------------:|:-----------------------------:|:-----------------------------:|:-----------------------------:|

## |Age(yrs) | | | | |

## |- Mean (SD) | 21.538 (5.109) | 22.388 (4.536) | 24.292 (5.655) | 23.238 (5.299) |

## |- Median (Q1, Q3) | 20.500 (17.250, 24.000) | 22.000 (19.000, 25.000) | 23.500 (20.000, 29.000) | 23.000 (19.000, 26.000) |

## |- Min - Max | 15.000 - 35.000 | 14.000 - 33.000 | 14.000 - 45.000 | 14.000 - 45.000 |

## |- Missing | 0 | 0 | 0 | 0 |

## |Birthweight(g) | | | | |

## |- Mean (SD) | 2719.692 (638.684) | 2804.015 (721.301) | 3103.740 (727.724) | 2944.656 (729.022) |

## |- Median (Q1, Q3) | 2849.000 (2370.500, 3057.000) | 2835.000 (2313.000, 3274.000) | 3076.000 (2584.750, 3651.000) | 2977.000 (2414.000, 3475.000) |

## |- Min - Max | 1135.000 - 3860.000 | 709.000 - 4054.000 | 1021.000 - 4990.000 | 709.000 - 4990.000 |

## |- Missing | 0 | 0 | 0 | 0 |

## |Smoker | | | | |

## |- No | 16 (61.5%) | 55 (82.1%) | 44 (45.8%) | 115 (60.8%) |

## |- Yes | 10 (38.5%) | 12 (17.9%) | 52 (54.2%) | 74 (39.2%) |

## |- Missing | 0 | 0 | 0 | 0 |#########################################################################

# Descriptive Statistics: Categorical Variables #

#########################################################################

tbl <-

table(low_birth_all$smoke, low_birth_all$race) # Two-way table

tbl##

## black other white

## 0 16 55 44

## 1 10 12 52prop.table(tbl, 1) # Row proportions##

## black other white

## 0 0.1391304 0.4782609 0.3826087

## 1 0.1351351 0.1621622 0.7027027prop.table(tbl, 2) # Column proportions##

## black other white

## 0 0.6153846 0.8208955 0.4583333

## 1 0.3846154 0.1791045 0.5416667# 3-way cross-tabulation

xtabs( ~ race + smoke + ht, data = low_birth_all)## , , ht = 0

##

## smoke

## race 0 1

## black 14 9

## other 51 12

## white 43 48

##

## , , ht = 1

##

## smoke

## race 0 1

## black 2 1

## other 4 0

## white 1 4T-Test

hide

One Group

################################################################

# Biostatistical Methods I #

# Statistical Inference: One-Sample Mean #

# Author: Cody Chiuzan #

################################################################

############################################################

# Sample mean distributions: CLT #

############################################################

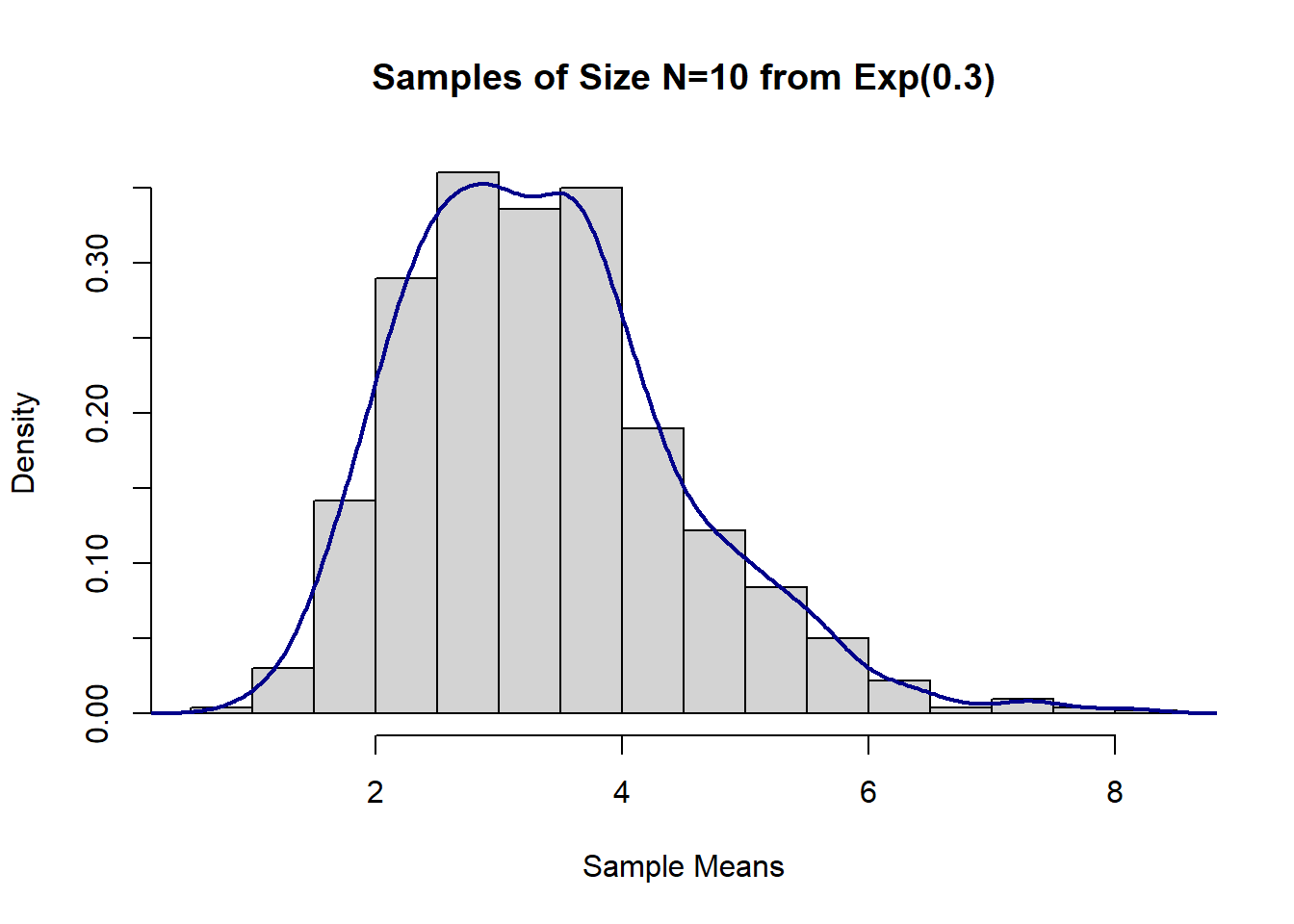

# Draw 1000 samples of size 10 from an underlying exponential distribution with parameter lambda=0.3

# Calculate their means/var and draw a histogram to vizualize the sample means distribution

set.seed(2)

sample_means_exp1 = rep(NA, 1000)

for (i in 1:1000) {

sample_means_exp1[i] = mean(rexp(10, 0.3))

}

# sample_means_exp

# Calculate the means and the variances of all samples

mean(sample_means_exp1) # compare to true Mean = 1/lambda## [1] 3.360129var(sample_means_exp1) # compare to true Var=1/lambda^2## [1] 1.261384#Histogram

hist(sample_means_exp1,

main = "Samples of Size N=10 from Exp(0.3)",

xlab = "Sample Means",

prob = T)

lines(density(sample_means_exp1), col = "darkblue", lwd = 2)

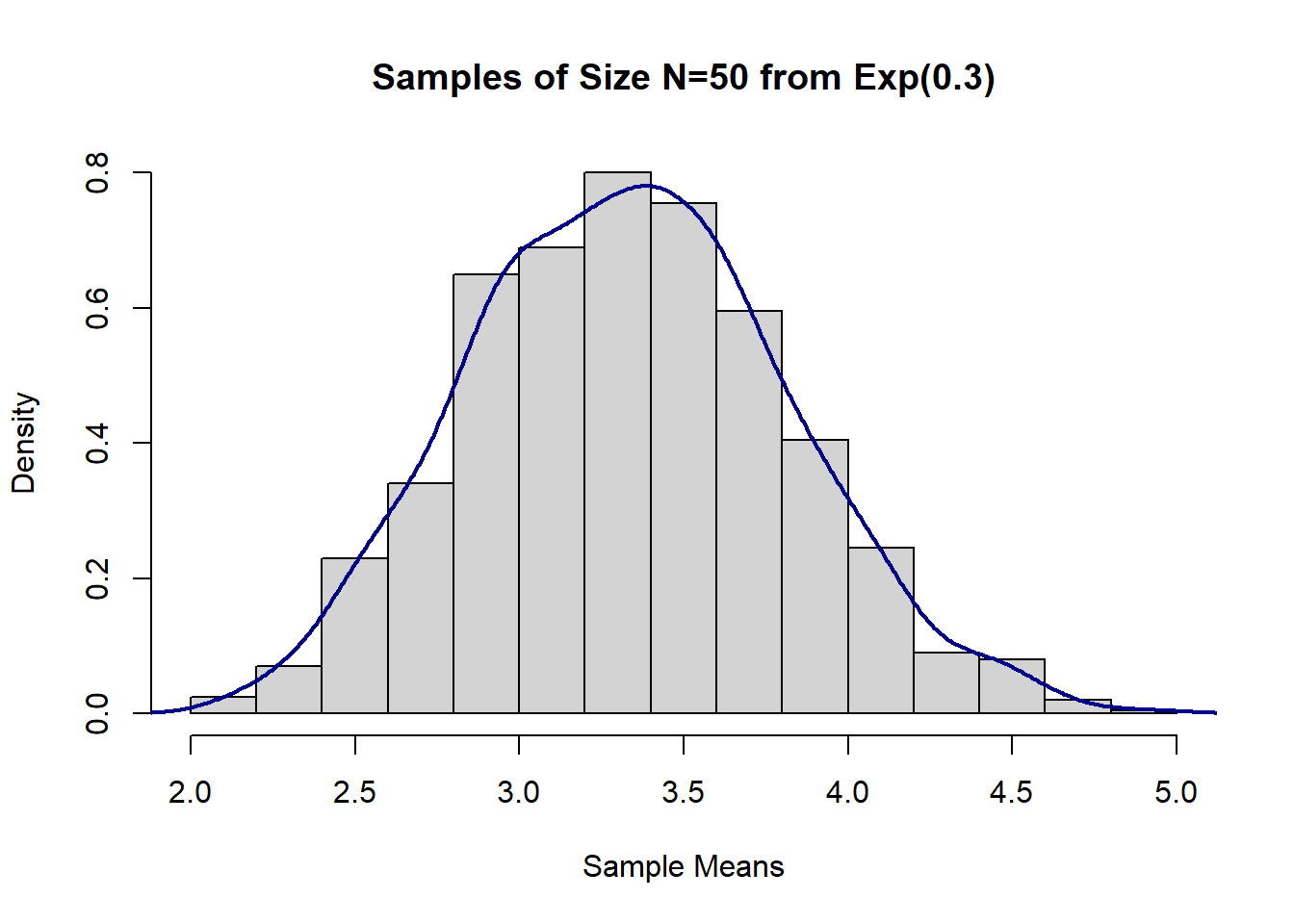

# Draw 1000 samples of size 50 from an underlying exponential distribution with parameter lambda=0.3

# Calculate their means/var and draw a histogram to vizualize the sample means distribution

set.seed(2)

sample_means_exp2 = rep(NA, 1000)

for (i in 1:1000) {

sample_means_exp2[i] = mean(rexp(50, 0.3))

}

# Calculate the means and the variances of all samples

mean(sample_means_exp2) # compare to true Mean = 1/lambda## [1] 3.330665var(sample_means_exp2) # compare to true Var=1/lambda^2## [1] 0.2316242#Histogram

hist(sample_means_exp2,

main = "Samples of Size N=50 from Exp(0.3)",

xlab = "Sample Means",

prob = T)

lines(density(sample_means_exp2), col = "darkblue", lwd = 2)

# Construct a 95% CI for the population mean with n=10, X_bar=175, and known sigma=15

# Sigma represents the pooulation standard deviation

# 1-(alpha/2)=1-(0.05/2)=0.975

LCLz95 <- 175 - qnorm(0.975) * 15 / sqrt(10)

UCLz95 <- 175 + qnorm(0.975) * 15 / sqrt(10)

CLz95 <- c(LCLz95, UCLz95)

CLz95## [1] 165.7031 184.2969# What if we want a 99% CI?

LCLz99 <- 175 - qnorm(0.995) * 15 / sqrt(10)

UCLz99 <- 175 + qnorm(0.995) * 15 / sqrt(10)

CLz99 <- c(LCLz99, UCLz99)

CLz99## [1] 162.7818 187.2182# Construct a 95% CI for the population mean with n=10 => df=10-1=9, X_bar=175, and known s=15

# s represents the sample standard deviation

LCLt95 <- 175 - qt(0.975, df = 9) * 15 / sqrt(10)

UCLt95 <- 175 + qt(0.975, df = 9) * 15 / sqrt(10)

CLt95 <- c(LCLt95, UCLt95)

CLt95## [1] 164.2696 185.7304# Construct a 95% CI for the population variance with known s=15

# s represents the sample standard deviation

LCL_var95 <- 9 * (15 ^ 2) / qchisq(0.975, 9)

UCL_var95 <- 9 * (15 ^ 2) / qchisq(0.025, 9)

CL_var95 <- c(LCL_var95, UCL_var95)

CL_var95## [1] 106.4514 749.8918# Hypothesis Test: Infarct size example

# Test if the mean infract size is different from 25

# X_bar=16, s=10, N=40

t_stats <- (16 - 25) / (10 / sqrt(40))

t_stats## [1] -5.6921# Compare the test statistics with the critical value, alpha=0.05

qt(0.975, 39) # 2.02## [1] 2.022691# Compute the p-value: t_stats<0, so the p-value is twice area to the left of a t distr. with 39 df

p.val <- 2 * pt(t_stats, 39) # p.val<.0001, reject H0.

# Remember the low_birth data

low_birth_all <-

read.csv(here::here("R_Code/R - Module 2/lowbwt_ALL.csv"))

# Let's test if the true mean is different than 3000g

# One-sample t-test, two-tailed

t.test(low_birth_all$bwt, alternative = 'two.sided', mu = 3000)##

## One Sample t-test

##

## data: low_birth_all$bwt

## t = -1.0437, df = 188, p-value = 0.298

## alternative hypothesis: true mean is not equal to 3000

## 95 percent confidence interval:

## 2840.049 3049.264

## sample estimates:

## mean of x

## 2944.656# Output from R

# One Sample t-test

# t = -1.0437, df = 188, p-value = 0.298 ----> Fail to reject H0.

# alternative hypothesis: true mean is not equal to 3000

# 95 percent confidence interval: 2840.049 3049.264

# sample estimates: mean of x is 2944.656

# Let's test if the true mean is less than 3000g

# One-sample t-test, one-tailed

t.test(low_birth_all$bwt, alternative = 'less', mu = 3000)##

## One Sample t-test

##

## data: low_birth_all$bwt

## t = -1.0437, df = 188, p-value = 0.149

## alternative hypothesis: true mean is less than 3000

## 95 percent confidence interval:

## -Inf 3032.312

## sample estimates:

## mean of x

## 2944.656# Output from R

# t = -1.0437, df = 188, p-value = 0.149 ----> Fail to reject H0.

# alternative hypothesis: true mean is less than 3000

# 95 percent confidence interval: -Inf 3032.312 (One-sided confidence interval)

# sample estimates: mean of x is 2944.656 Two Group

################################################################

# Biostatistical Methods I #

# Statistical Inference: Two-Sample Means #

# Author: Cody Chiuzan; Date: Sept 23, 2019 #

################################################################

###########################################################################

# Conduct a two-sample paired t-test to assess the effect of a new diet #

###########################################################################

weight_before <- c(201, 231, 221, 260, 228, 237, 326, 235, 240, 267, 284, 201)

weight_after <- c(200, 236, 216, 233, 224, 216, 296, 195, 207, 247, 210, 209)

weight_diff <- weight_after - weight_before

sd_diff <- sd(weight_diff)

test_weight <- mean(weight_diff) / (sd_diff / sqrt(length(weight_diff)))

# Use the t.test() built-in function

# What alternative are you testing?

t.test(weight_after,

weight_before,

paired = T,

alternative = "less")##

## Paired t-test

##

## data: weight_after and weight_before

## t = -3.0201, df = 11, p-value = 0.005827

## alternative hypothesis: true difference in means is less than 0

## 95 percent confidence interval:

## -Inf -8.174729

## sample estimates:

## mean of the differences

## -20.16667# R output

# data: weight_after and weight_before

# t = -3.0201, df = 11, p-value = 0.005827

# alternative hypothesis: true difference in means is less than 0

# 95 percent confidence interval: -Inf -8.174729

# sample estimates: mean of the differences -20.16667

# Reject the null and conclude that the mean LDL levels are significantly lower after the diet.

###########################################################################################################

# Conduct a two-sample independent t-test to assess the differences in BMD b/w the OC and non-OC groups #

###########################################################################################################

# Oral contraceptive example

# Testing equality of variances for two independent samples

# drawn from two underlying normal distributions.

# Sample 1: s1=0.16, n1=10, x1_bar=1.08

# Sample 2: s2=0.14, n2=10, x2_bar=1.00

F_test <- 0.16 ^ 2 / 0.14 ^ 2

F_crit <- qf(.975, df1 = 9, df2 = 9)

# Compare the F statistic (F_test) to the critical value

# Fcrit: F with 9 dfs in numerator and 9 dfs in denominator

# Because F_test < F_crit, we fail to reject and conclude

# that the pop. variances are not significantly different.

# Use two-sample t-test with equal variances.

std_pooled <- sqrt(((0.16 ^ 2 * 9) + (0.14 ^ 2 * 9)) / 18)

t_stats <- (1.08 - 1.00) / (std_pooled * sqrt((1 / 10) + (1 / 10)))

# Compare t_stats to the critical value: t with 18 df

qt(0.975, 18) # 2.10## [1] 2.100922# t-stats=1.19 < 2.10, fail to reject the null and conclude that

# there is not a sig difference between the mean BMD levels of the two groups.

# 95% CI is your practice!

################################################################

# Two-Sample independent t-test #

################################################################

# Effect of caffeine on muscle metabolism.

# 15 men were randomly selected to take a capsule containing pure caffeine one hour before the test.

# The other group of 20 men received a placebo capsule.

# During each exercise the subject's respiratory exchange ratio (RER) was measured.

# The question of interest to the experimenter was whether, on average, caffeine consumption has an effect on RER.

# The two samples came from two underlying normal distributions: N(94.2,5.6), N(105.5,8.1) and are independent.

# Ideally, you should generate data using past info (here I made it up).

set.seed(6)

caff <- rnorm(15, 94.2, 5.6)

placebo <- rnorm(20, 105.5, 8.1)

# Test equality of variances: use R function var.test()

var.test(placebo, caff, alternative = "two.sided")##

## F test to compare two variances

##

## data: placebo and caff

## F = 3.5768, num df = 19, denom df = 14, p-value = 0.01881

## alternative hypothesis: true ratio of variances is not equal to 1

## 95 percent confidence interval:

## 1.250308 9.467490

## sample estimates:

## ratio of variances

## 3.576784#F = 3.5768, num df = 19, denom df = 14, p-value = 0.01881 # Reject the null, evidence that variances are not equal.

res <-

t.test(caff, placebo, var.equal = FALSE, paired = FALSE) # var.equal=FALSE is the default, so no need to specifically write it.

res##

## Welch Two Sample t-test

##

## data: caff and placebo

## t = -4.5834, df = 30.125, p-value = 7.472e-05

## alternative hypothesis: true difference in means is not equal to 0

## 95 percent confidence interval:

## -17.639668 -6.766628

## sample estimates:

## mean of x mean of y

## 94.68082 106.88397# Look at the complete list of results.

names(res)## [1] "statistic" "parameter" "p.value" "conf.int" "estimate"

## [6] "null.value" "stderr" "alternative" "method" "data.name"# t = -4.5834, df = 30.125, p-value = 7.472e-05 # Reject the null and conclude that the means RER are sig diff b/w the two groups.

# 95% CI of the difference: (-17.639668,-6.766628) # We could safely say that the mean RER is sig. lower in the caffeine group (Why?)Multigroup Camparison

hide

ANOVA

################################################################

# Biostatistical Methods I #

# One-Way Analysis of Variance (ANOVA) #

# Author: Cody Chiuzan #

################################################################

################################################################

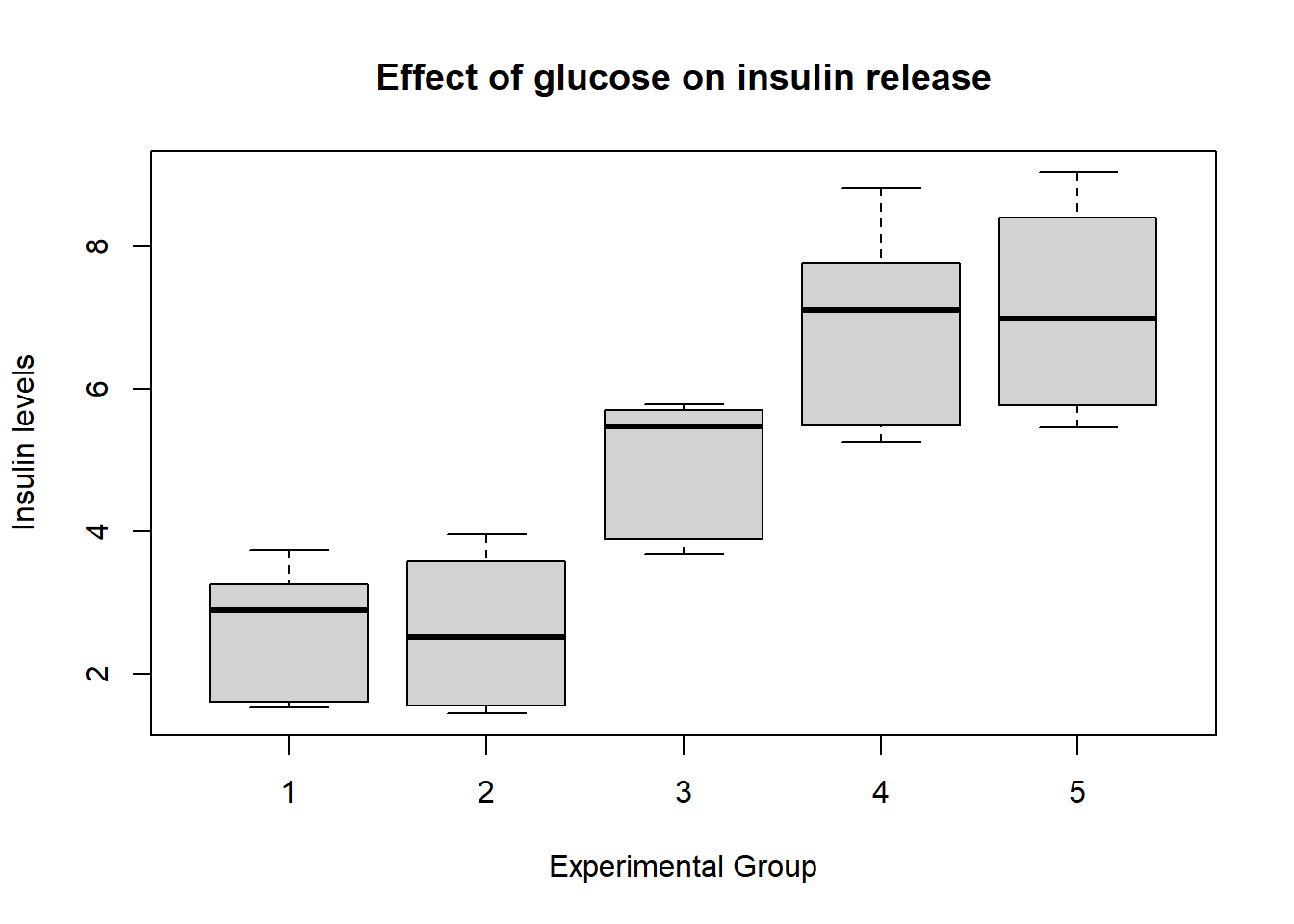

# A study is examining the effect of glucose on insulin release.

# Specimens of pancreatic tissue from experimental animals were

# treated with five different stimulants and the insulin levels were recorded.

# Use an ANOVA test to compare the mean insulin levels across the five groups.

################################################################

ins1 <- c(1.53, 1.61, 3.75, 2.89, 3.26)

ins2 <- c(3.15, 3.96, 3.59, 1.89, 1.45, 1.56)

ins3 <- c(3.89, 3.68, 5.70, 5.62, 5.79, 5.33)

ins4 <- c(8.18, 5.64, 7.36, 5.33, 8.82, 5.26, 7.10)

ins5 <- c(5.86, 5.46, 5.69, 6.49, 7.81, 9.03, 7.49, 8.98)

# Re-shape the data

insulin <- c(ins1, ins2, ins3, ins4, ins5)

ind <-

c(rep(1, length(ins1)),

rep(2, length(ins2)),

rep(3, length(ins3)),

rep(4, length(ins4)),

rep(5, length(ins5)))

new_data <- as.data.frame(cbind(insulin, ind))

head(new_data)## insulin ind

## 1 1.53 1

## 2 1.61 1

## 3 3.75 1

## 4 2.89 1

## 5 3.26 1

## 6 3.15 2# Summarize the data

tmp_functn <-

function(x)

c(

sum = sum(x),

mean = mean(x),

var = var(x),

n = length(x)

)

tapply(insulin, ind, tmp_functn)## $`1`

## sum mean var n

## 13.04000 2.60800 0.99172 5.00000

##

## $`2`

## sum mean var n

## 15.60000 2.60000 1.20808 6.00000

##

## $`3`

## sum mean var n

## 30.0100000 5.0016667 0.9163767 6.0000000

##

## $`4`

## sum mean var n

## 47.690000 6.812857 2.044224 7.000000

##

## $`5`

## sum mean var n

## 56.810000 7.101250 2.071841 8.000000# Create box-plots

boxplot(

insulin ~ ind,

data = new_data,

main = "Effect of glucose on insulin release",

xlab = "Experimental Group",

ylab = "Insulin levels"

)

# Perform an ANOVA test: are the mean insulin levels significantly different?

# Need to mention the independent variable as a factor; o/w will be considered continuous

# Function lm() is broader, including linear regression models

res <- lm(insulin ~ factor(ind), data = new_data)

# Coefficients of the ANOVA model with 'grand mean' and alpha effects.

# Will use them later in regression.

res##

## Call:

## lm(formula = insulin ~ factor(ind), data = new_data)

##

## Coefficients:

## (Intercept) factor(ind)2 factor(ind)3 factor(ind)4 factor(ind)5

## 2.608 -0.008 2.394 4.205 4.493# Our regular ANOVA table with SS, Mean SS and F-test

anova(res)## Analysis of Variance Table

##

## Response: insulin

## Df Sum Sq Mean Sq F value Pr(>F)

## factor(ind) 4 121.185 30.2964 19.779 1.046e-07 ***

## Residuals 27 41.357 1.5318

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1# Another option using aov();

# Save the anova object to use later for multiple comparisons

res1 <- aov(insulin ~ factor(ind), data = new_data)

summary(res1)## Df Sum Sq Mean Sq F value Pr(>F)

## factor(ind) 4 121.19 30.296 19.78 1.05e-07 ***

## Residuals 27 41.36 1.532

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1Between Groups Comparison

library(multcomp)# Multiple comparisons adjustments: includes Bonferroni, Holm, Benjamini-Hochberg

pairwise.t.test(new_data$insulin, new_data$ind, p.adj = 'bonferroni')##

## Pairwise comparisons using t tests with pooled SD

##

## data: new_data$insulin and new_data$ind

##

## 1 2 3 4

## 2 1.000 - - -

## 3 0.036 0.023 - -

## 4 3.6e-05 1.5e-05 0.139 -

## 5 8.1e-06 3.1e-06 0.041 1.000

##

## P value adjustment method: bonferroni# For Tukey, we need to use another function with an object created by aov()

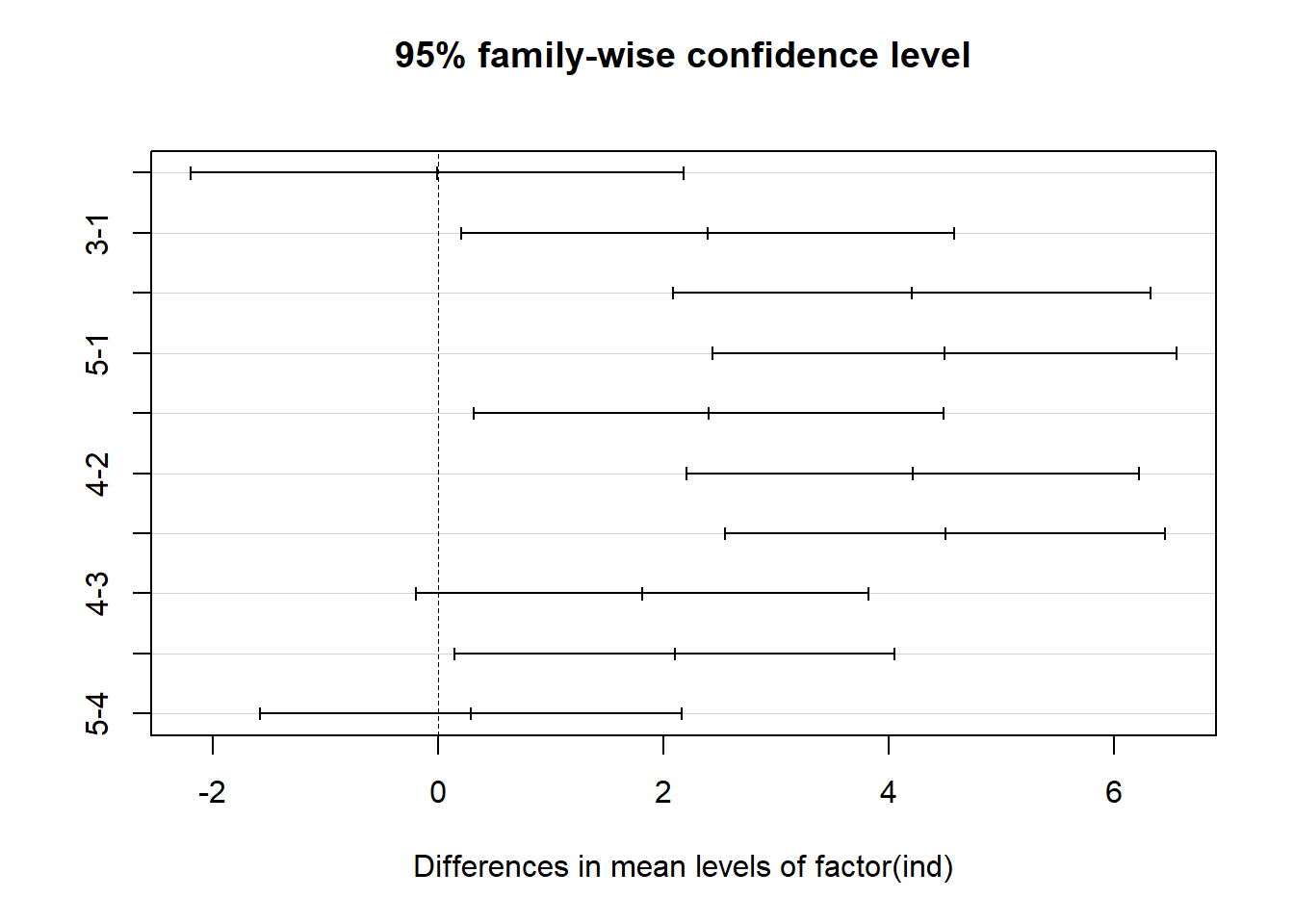

Tukey_comp <- TukeyHSD(res1)

Tukey_comp## Tukey multiple comparisons of means

## 95% family-wise confidence level

##

## Fit: aov(formula = insulin ~ factor(ind), data = new_data)

##

## $`factor(ind)`

## diff lwr upr p adj

## 2-1 -0.0080000 -2.1968435 2.180844 1.0000000

## 3-1 2.3936667 0.2048232 4.582510 0.0269106

## 4-1 4.2048571 2.0882727 6.321442 0.0000330

## 5-1 4.4932500 2.4325220 6.553978 0.0000076

## 3-2 2.4016667 0.3146863 4.488647 0.0181513

## 4-2 4.2128571 2.2017925 6.223922 0.0000145

## 5-2 4.5012500 2.5490586 6.453441 0.0000030

## 4-3 1.8111905 -0.1998742 3.822255 0.0927137

## 5-3 2.0995833 0.1473919 4.051775 0.0304080

## 5-4 0.2883929 -1.5824211 2.159207 0.9910177plot(Tukey_comp)

# Dunnett's test: multiple comparisons with a specified control (here group #1)

summary(glht(res1), linfct = mcp(Group = "Dunnett"))## Warning in chkdots(...): Argument(s) 'linfct' passed to '...' are ignored##

## Simultaneous Tests for General Linear Hypotheses

##

## Fit: aov(formula = insulin ~ factor(ind), data = new_data)

##

## Linear Hypotheses:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) == 0 2.6080 0.5535 4.712 <0.001 ***

## factor(ind)2 == 0 -0.0080 0.7494 -0.011 1.000

## factor(ind)3 == 0 2.3937 0.7494 3.194 0.013 *

## factor(ind)4 == 0 4.2049 0.7247 5.802 <0.001 ***

## factor(ind)5 == 0 4.4932 0.7056 6.368 <0.001 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

## (Adjusted p values reported -- single-step method)Proportion

hide

Normal Approximate Binomial

################################################################

# Biostatistical Methods I #

# Inferences for One-Sample Proportions #

# Author: Cody Chiuzan #

################################################################

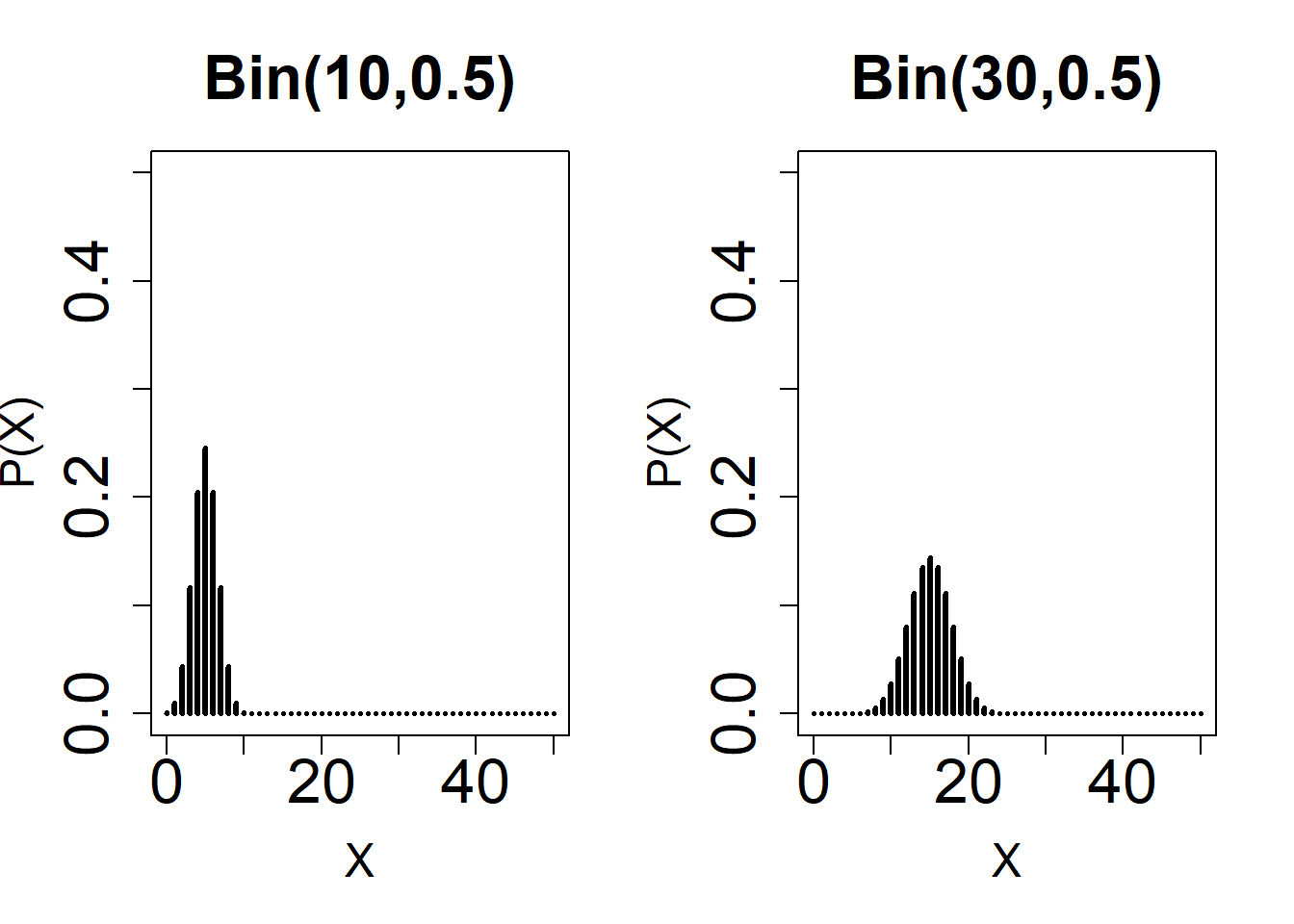

# Normal Approximation: Observe the shape of different Binomial distributions with varying n and p.

#1

par(mfrow = c(1, 2))

plot(

0:50,

dbinom(0:50, 10, 0.5),

type = 'h',

ylim = c(0, 0.50),

xlab = 'X',

main = 'Bin(10,0.5)',

ylab = 'P(X)',

lwd = 3,

cex.lab = 1.5,

cex.axis = 2,

cex.main = 2

)

plot(

0:50,

dbinom(0:50, 30, 0.5),

type = 'h',

ylim = c(0, 0.50),

xlab = 'X',

main = 'Bin(30,0.5)',

ylab = 'P(X)',

lwd = 3,

cex.lab = 1.5,

cex.axis = 2,

cex.main = 2

)

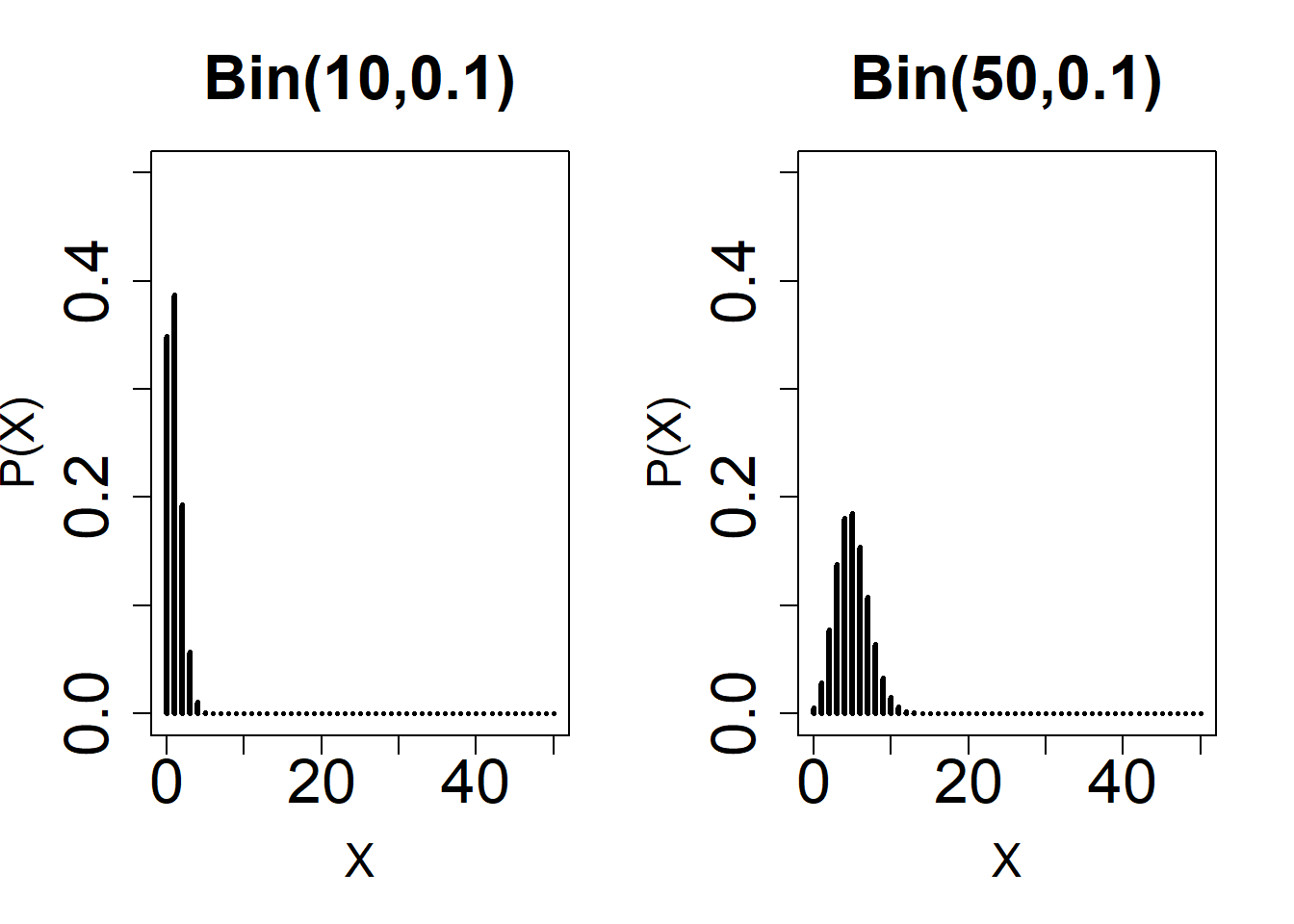

#2

par(mfrow = c(1, 2))

plot(

0:50,

dbinom(0:50, 10, 0.10),

type = 'h',

ylim = c(0, 0.50),

xlab = 'X',

main = 'Bin(10,0.1)',

ylab = 'P(X)',

lwd = 3,

cex.lab = 1.5,

cex.axis = 2,

cex.main = 2

)

plot(

0:50,

dbinom(0:50, 50, 0.10),

type = 'h',

ylim = c(0, 0.50),

xlab = 'X',

main = 'Bin(50,0.1)',

ylab = 'P(X)',

lwd = 3,

cex.lab = 1.5,

cex.axis = 2,

cex.main = 2

)

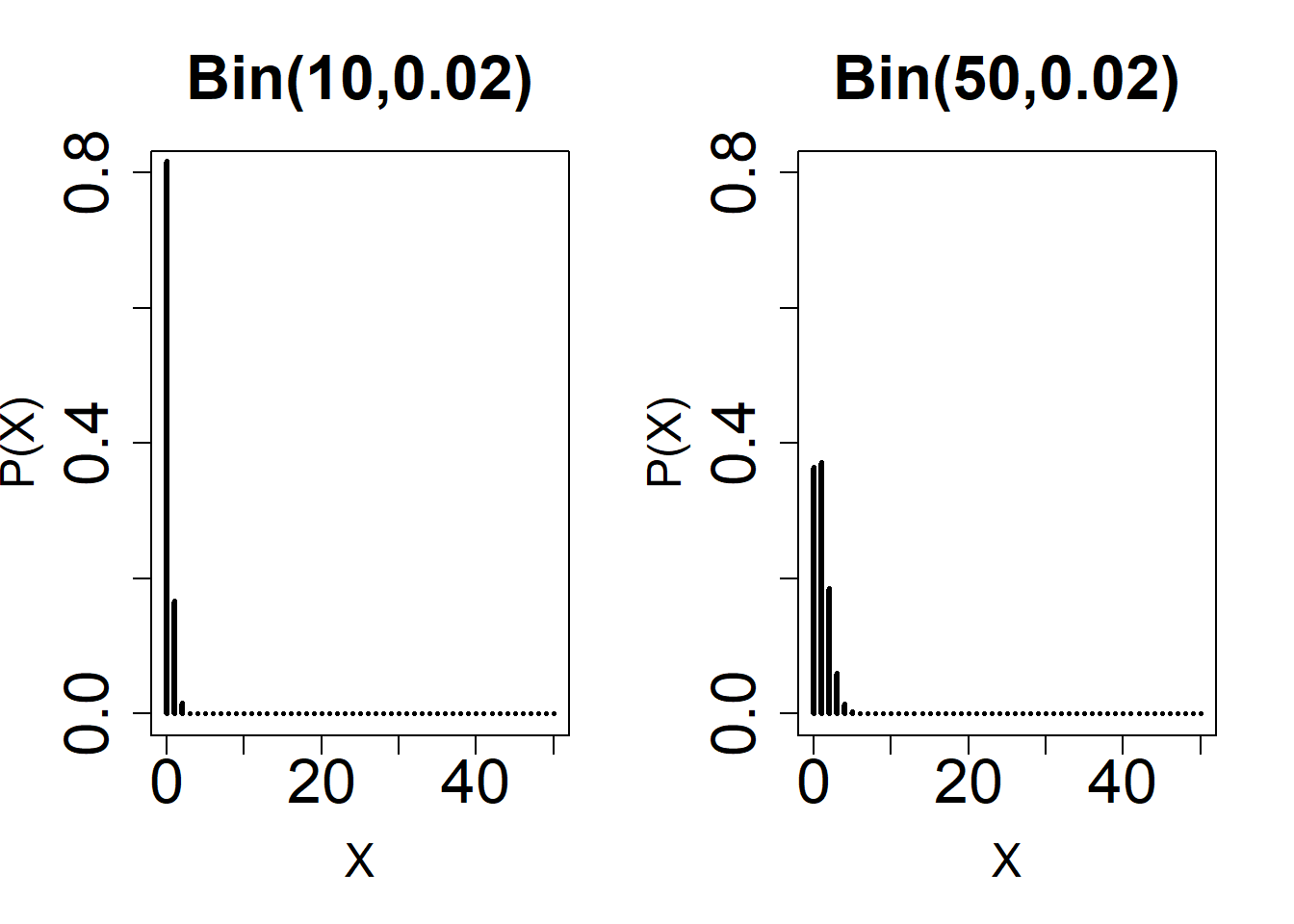

#3

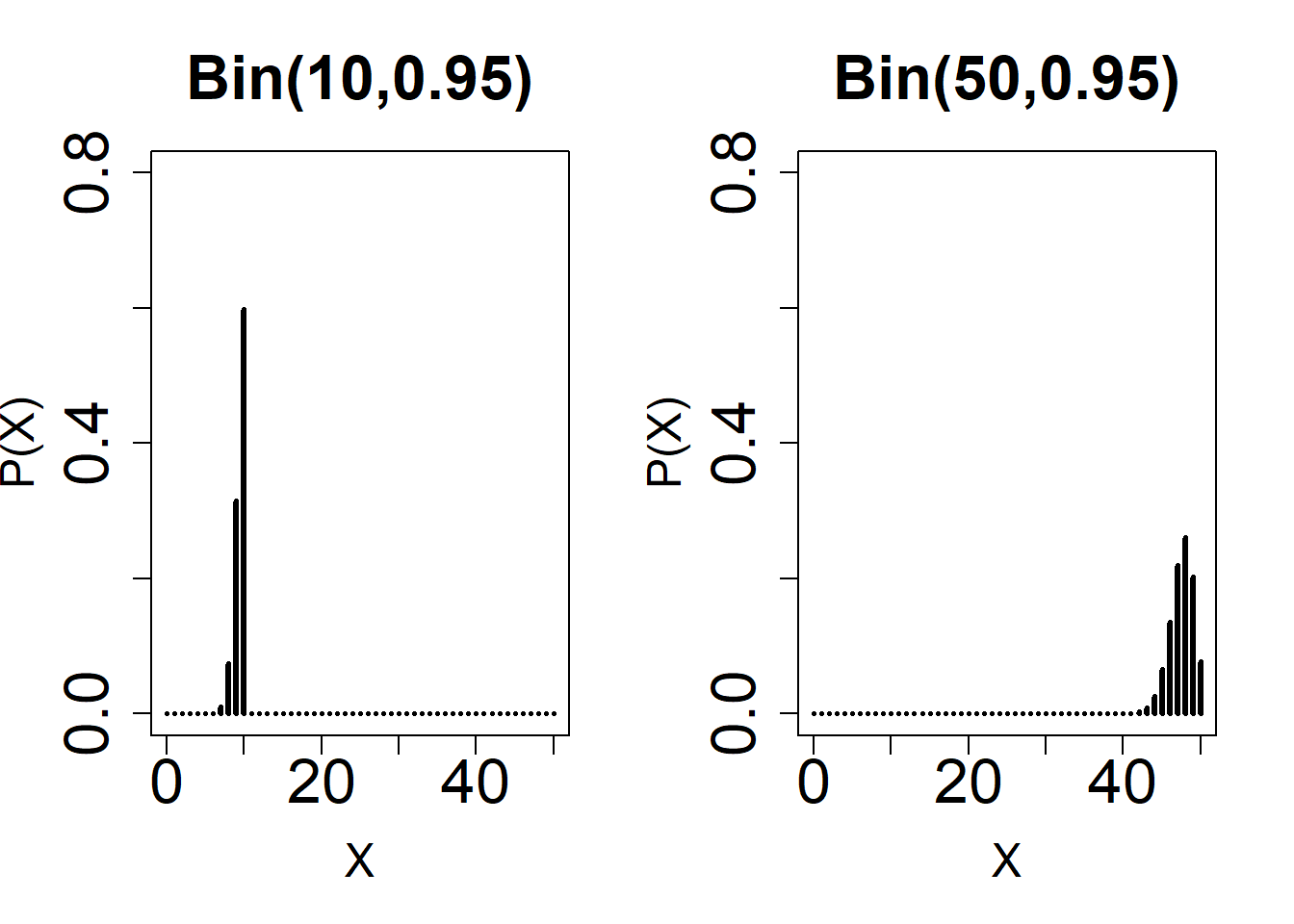

par(mfrow = c(1, 2))

plot(

0:50,

dbinom(0:50, 10, 0.02),

type = 'h',

ylim = c(0, 0.8),

xlab = 'X',

main = 'Bin(10,0.02)',

ylab = 'P(X)',

lwd = 3,

cex.lab = 1.5,

cex.axis = 2,

cex.main = 2

)

plot(

0:50,

dbinom(0:50, 50, 0.02),

type = 'h',

ylim = c(0, 0.8),

xlab = 'X',

main = 'Bin(50,0.02)',

ylab = 'P(X)',

lwd = 3,

cex.lab = 1.5,

cex.axis = 2,

cex.main = 2

)

#4

par(mfrow = c(1, 2))

plot(

0:50,

dbinom(0:50, 10, 0.95),

type = 'h',

ylim = c(0, 0.8),

xlab = 'X',

main = 'Bin(10,0.95)',

ylab = 'P(X)',

lwd = 3,

cex.lab = 1.5,

cex.axis = 2,

cex.main = 2

)

plot(

0:50,

dbinom(0:50, 50, 0.95),

type = 'h',

ylim = c(0, 0.8),

xlab = 'X',

main = 'Bin(50,0.95)',

ylab = 'P(X)',

lwd = 3,

cex.lab = 1.5,

cex.axis = 2,

cex.main = 2

)

################################################################

# In a survey of 300 randomly selected drivers, 125 claimed that

# they regularly wear seat belts. Can we conclude from these data

# that the population proportion who regularly wear seat belts is 0.50?

# Perform a hypothesis test and

# Construct a 95% confidence interval for the true population proportion.

################################################################

# p_hat=125/300

# p0=0.50

# Prop.test performs a chi-squared test and not a z-test.

prop.test(125, 300, p = 0.5)##

## 1-sample proportions test with continuity correction

##

## data: 125 out of 300, null probability 0.5

## X-squared = 8.0033, df = 1, p-value = 0.004669

## alternative hypothesis: true p is not equal to 0.5

## 95 percent confidence interval:

## 0.3606621 0.4748409

## sample estimates:

## p

## 0.4166667# Create your own function to perform a one-sample proportion test

# and create a 100(1-alpha) CI using the Normal Approximation

one.proptest_norm <-

function(x,

n,

p = NULL,

conf.level = 0.95,

alternative = "less") {

# x the number of 'cases' in the sample

# n the total sample size

# p is the hypothesized value

z.stat <- NULL

cint <- NULL

p.val <- NULL

phat <- x / n

qhat <- 1 - phat

if (length(p) > 0) {

q <- 1 - p

SE.phat <- sqrt((p * q) / n)

z.stat <- (phat - p) / SE.phat

p.val <- pnorm(z.stat)

if (alternative == "two.sided") {

p.val <- p.val * 2

}

if (alternative == "greater") {

p.val <- 1 - p.val

}

} else {

# Construct a confidence interval

SE.phat <- sqrt((phat * qhat) / n)

}

cint <-

phat + c(-1 * ((qnorm(((1 - conf.level) / 2

) + conf.level)) * SE.phat),

((qnorm(((1 - conf.level) / 2

) + conf.level)) * SE.phat))

return(list(

estimate = phat,

z.stat = z.stat,

p.val = p.val,

cint = cint

))

}

# In our example:

one.proptest_norm(125, 300, 0.5, alternative = "two.sided")## $estimate

## [1] 0.4166667

##

## $z.stat

## [1] -2.886751

##

## $p.val

## [1] 0.003892417

##

## $cint

## [1] 0.3600874 0.4732460# P-hat estimate

#0.417

# Z-statistic: z.stat

# -2.886

#$p.val

#0.004

# 95 % CI: (0.360, 0.473)

####################################################################

# Perform an Exact test, no normal approximation #

# This function uses Clopper-Pearson method #

####################################################################

binom.test(125,

300,

p = 0.5,

alternative = "two.sided",

conf.level = 0.95)##

## Exact binomial test

##

## data: 125 and 300

## number of successes = 125, number of trials = 300, p-value = 0.004589

## alternative hypothesis: true probability of success is not equal to 0.5

## 95 percent confidence interval:

## 0.3602804 0.4747154

## sample estimates:

## probability of success

## 0.4166667# 95% Exact CI: (0.360, 0.474)

# Exact p-value: 0.004Contingency Table Method

#################################################################

# Biostatistical Methods I #

# Contingency Tables: Tests for Categorical Data #

# Author: Cody Chiuzan #

#################################################################

################################################################

# Chi-Squared Test #

################################################################

# Marijuana usage among colleg students

# Chi-squared test for homogeneity

drug_data <-

matrix(

c(57, 50, 43, 57, 58, 20, 56, 45, 24, 45, 22, 33),

nrow = 4,

ncol = 3,

byrow = T,

dimnames = list(

c("freshman", "sophomore", "junior", "senior"),

c("experimental", "casual", "modheavy")

)

)

drug_data## experimental casual modheavy

## freshman 57 50 43

## sophomore 57 58 20

## junior 56 45 24

## senior 45 22 33chisq.test(drug_data)##

## Pearson's Chi-squared test

##

## data: drug_data

## X-squared = 19.369, df = 6, p-value = 0.003584# X-squared = 19.369, df = 6, p-value = 0.003584

# Critical value: qchisq(0.95,6) = 12.59

# We reject the null hypothesis and conclude that the proportions of marijuana usage are different among classes

###

# Association b/w pelvic inflammatory disease and ectopic pregnancy

# Chi-squared test for independence

preg_data <- matrix(

c(28, 6, 251, 273),

nrow = 2,

ncol = 2,

byrow = T,

dimnames = list(c("PID", "No PID"),

c("Ect Preg", "No Ect Preg"))

)

preg_data## Ect Preg No Ect Preg

## PID 28 6

## No PID 251 273chisq.test(preg_data)##

## Pearson's Chi-squared test with Yates' continuity correction

##

## data: preg_data

## X-squared = 13.812, df = 1, p-value = 0.000202# Get the expected values: chisq.test(preg_data)$expected

# X-squared with Yates' correction

# X-squared=13.81, df=1, p-value=0.0002

# Critical value: qchisq(0.95,1) = 3.84

# We reject the null and conclude that there is sufficient evidence that PID and ectopic pregnancy are associated.

###

# What if we have raw data? How do we perform a chi-squared test?

# Use R data 'quine' (library MASS) to compare gender distribution between ethnicities.

library(MASS)

data(quine)

names(quine)## [1] "Eth" "Sex" "Age" "Lrn" "Days"# Create a 2x2 table

table(quine$Sex, quine$Eth)##

## A N

## F 38 42

## M 31 35# Compute row percentages

prop.table(table(quine$Sex, quine$Eth), 1)##

## A N

## F 0.475000 0.525000

## M 0.469697 0.530303# Chi-squared without continuity correction.

chisq.test(table(quine$Sex, quine$Eth), correct = F)##

## Pearson's Chi-squared test

##

## data: table(quine$Sex, quine$Eth)

## X-squared = 0.0040803, df = 1, p-value = 0.9491# X-squared = 0.004, df = 1, p-value = 0.949. Not a significant difference.

# Chi-squared with continuity correction.

chisq.test(table(quine$Sex, quine$Eth), correct = T)##

## Pearson's Chi-squared test with Yates' continuity correction

##

## data: table(quine$Sex, quine$Eth)

## X-squared = 0, df = 1, p-value = 1# X-squared ~ 0, df = 1, p-value = 1. Not a significant difference.

#################################################################

# Biostatistical Methods I #

# Contingency Tables: Tests for Categorical Data #

# Author: Cody Chiuzan #

#################################################################

###############################################################

# Fisher's Exact test for small cell counts (Eij < 5) #

###############################################################

# Tea-time experiment

# One-tailed test: calculations were also made in the lecture notes

tea_exp <- matrix(c(3, 1, 1, 3), nrow = 2)

fisher.test(tea_exp, alternative = "greater")##

## Fisher's Exact Test for Count Data

##

## data: tea_exp

## p-value = 0.2429

## alternative hypothesis: true odds ratio is greater than 1

## 95 percent confidence interval:

## 0.3135693 Inf

## sample estimates:

## odds ratio

## 6.408309# P-val=0.2429, fail to conclude discriminating ability

# Two-sided Fisher test

fisher.test(tea_exp)##

## Fisher's Exact Test for Count Data

##

## data: tea_exp

## p-value = 0.4857

## alternative hypothesis: true odds ratio is not equal to 1

## 95 percent confidence interval:

## 0.2117329 621.9337505

## sample estimates:

## odds ratio

## 6.408309# P-val=0.4857

# CI is for the odds ratio, not difference in proportions

# What if we used a chi-square instead? Not ideal as the exp freq are <5

chisq.test(tea_exp)## Warning in chisq.test(tea_exp): Chi-squared approximation may be incorrect##

## Pearson's Chi-squared test with Yates' continuity correction

##

## data: tea_exp

## X-squared = 0.5, df = 1, p-value = 0.4795# P-val=0.4795

# Notice that the two-sided p-value from Fisher is greater than the one generated by chi-square

# This supports the conclusion that Fisher Exat Test is more conservative (harder to reject)

practice_data <- matrix(c(1, 8, 9, 3), nrow = 2,

dimnames = list(c("diet", "non-diet"), c("men", "women")))

chisq.test(practice_data)## Warning in chisq.test(practice_data): Chi-squared approximation may be incorrect##

## Pearson's Chi-squared test with Yates' continuity correction

##

## data: practice_data

## X-squared = 6.0494, df = 1, p-value = 0.01391# Pearson's Chi-squared test with Yates' continuity correction for 2X2 table

# X-squared = 6.0494, df = 1, p-value = 0.01391

# Warning message:

# In chisq.test(practice_data) : Chi-squared approximation may be incorrect

fisher.test(practice_data)##

## Fisher's Exact Test for Count Data

##

## data: practice_data

## p-value = 0.007519

## alternative hypothesis: true odds ratio is not equal to 1

## 95 percent confidence interval:

## 0.0008560335 0.6145334348

## sample estimates:

## odds ratio

## 0.05080595# Fisher's Exact Test for Count Data

# p-value = 0.007519 # Notice the difference in p-values b/w chi-squared and Fisher

###############################################################

# McNemar Test for binomial matched-pair data #

# Normal approximation #

###############################################################

# Two procedures are tested on the same 75 subjects

# in order to identify the absence/presence of the disease

procedure_data <- matrix(

c(41, 8, 14, 12),

nrow = 2,

byrow = T,

dimnames = list(c("positive", "negative"), c("positive", "negative"))

)

mcnemar.test(procedure_data)##

## McNemar's Chi-squared test with continuity correction

##

## data: procedure_data

## McNemar's chi-squared = 1.1364, df = 1, p-value = 0.2864# McNemar's Chi-squared test with continuity correction

# McNemar's Chi-squared = 1.1364, df = 1, p-value = 0.2864

# What if you performed a chi-squared test instead?

chisq.test(procedure_data)##

## Pearson's Chi-squared test with Yates' continuity correction

##

## data: procedure_data

## X-squared = 6.278, df = 1, p-value = 0.01222# X-squared = 6.278, df = 1, p-value = 0.01222

# Notice that the conclusions would be totally different.Regression

Hide

SLR

################################################################

# Biostatistical Methods I #

# Simple Linear Regression #

################################################################

library(faraway)##

## Attaching package: 'faraway'## The following objects are masked from 'package:survival':

##

## rats, solderlibrary(broom)

library(dplyr)

# Load data diabetes

data(diabetes)

names(diabetes)## [1] "id" "chol" "stab.glu" "hdl" "ratio" "glyhb"

## [7] "location" "age" "gender" "height" "weight" "frame"

## [13] "bp.1s" "bp.1d" "bp.2s" "bp.2d" "waist" "hip"

## [19] "time.ppn"summary(diabetes)## id chol stab.glu hdl

## Min. : 1000 Min. : 78.0 Min. : 48.0 Min. : 12.00

## 1st Qu.: 4792 1st Qu.:179.0 1st Qu.: 81.0 1st Qu.: 38.00

## Median :15766 Median :204.0 Median : 89.0 Median : 46.00

## Mean :15978 Mean :207.8 Mean :106.7 Mean : 50.45

## 3rd Qu.:20336 3rd Qu.:230.0 3rd Qu.:106.0 3rd Qu.: 59.00

## Max. :41756 Max. :443.0 Max. :385.0 Max. :120.00

## NA's :1 NA's :1

## ratio glyhb location age gender

## Min. : 1.500 Min. : 2.68 Buckingham:200 Min. :19.00 male :169

## 1st Qu.: 3.200 1st Qu.: 4.38 Louisa :203 1st Qu.:34.00 female:234

## Median : 4.200 Median : 4.84 Median :45.00

## Mean : 4.522 Mean : 5.59 Mean :46.85

## 3rd Qu.: 5.400 3rd Qu.: 5.60 3rd Qu.:60.00

## Max. :19.300 Max. :16.11 Max. :92.00

## NA's :1 NA's :13

## height weight frame bp.1s bp.1d

## Min. :52.00 Min. : 99.0 small :104 Min. : 90.0 Min. : 48.00

## 1st Qu.:63.00 1st Qu.:151.0 medium:184 1st Qu.:121.2 1st Qu.: 75.00

## Median :66.00 Median :172.5 large :103 Median :136.0 Median : 82.00

## Mean :66.02 Mean :177.6 NA's : 12 Mean :136.9 Mean : 83.32

## 3rd Qu.:69.00 3rd Qu.:200.0 3rd Qu.:146.8 3rd Qu.: 90.00

## Max. :76.00 Max. :325.0 Max. :250.0 Max. :124.00

## NA's :5 NA's :1 NA's :5 NA's :5

## bp.2s bp.2d waist hip

## Min. :110.0 Min. : 60.00 Min. :26.0 Min. :30.00

## 1st Qu.:138.0 1st Qu.: 84.00 1st Qu.:33.0 1st Qu.:39.00

## Median :149.0 Median : 92.00 Median :37.0 Median :42.00

## Mean :152.4 Mean : 92.52 Mean :37.9 Mean :43.04

## 3rd Qu.:161.0 3rd Qu.:100.00 3rd Qu.:41.0 3rd Qu.:46.00

## Max. :238.0 Max. :124.00 Max. :56.0 Max. :64.00

## NA's :262 NA's :262 NA's :2 NA's :2

## time.ppn

## Min. : 5.0

## 1st Qu.: 90.0

## Median : 240.0

## Mean : 341.2

## 3rd Qu.: 517.5

## Max. :1560.0

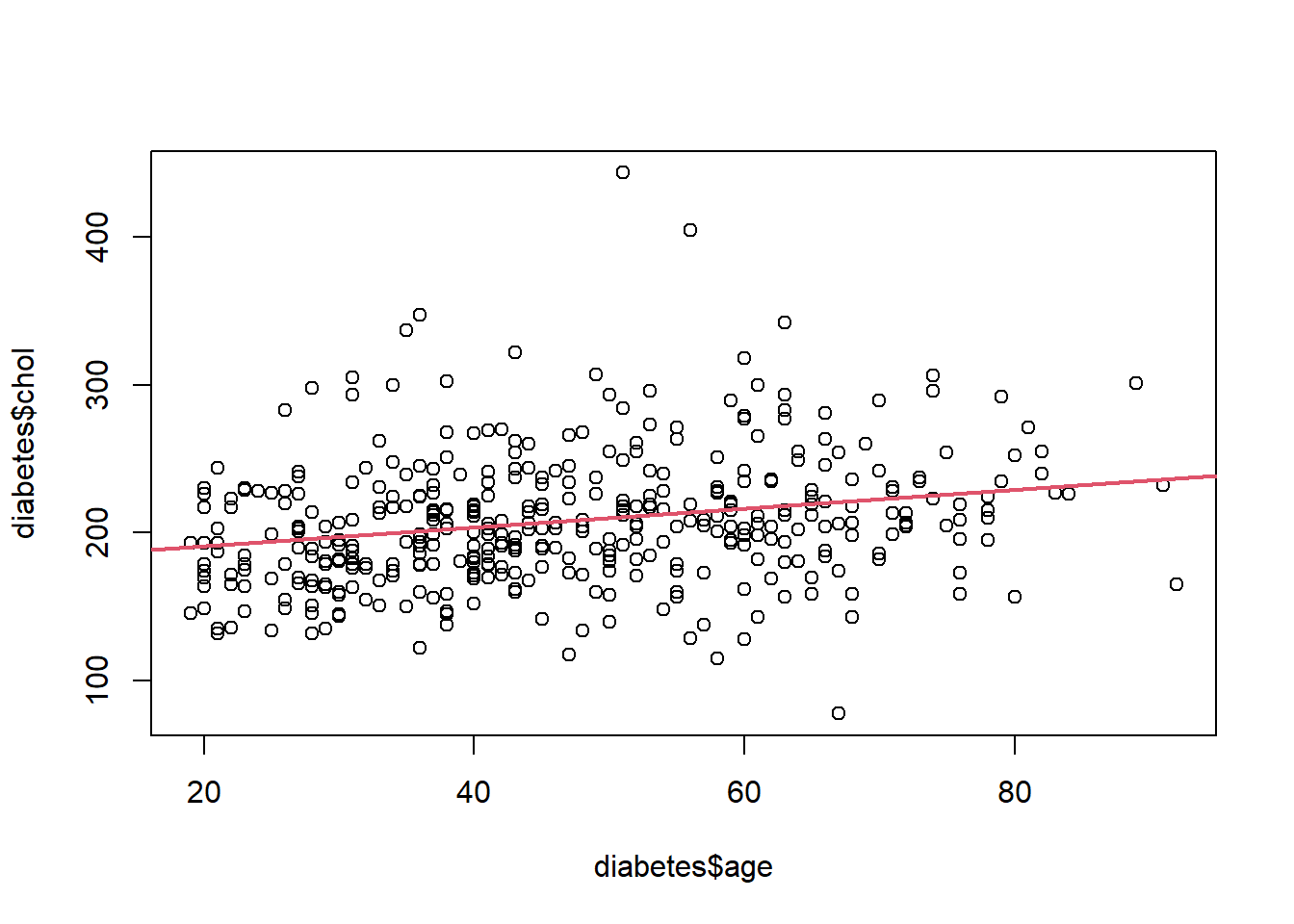

## NA's :3# Plot (Y) chol vs age (X)

plot(diabetes$age, diabetes$chol)

reg_diab<-lm(diabetes$chol~diabetes$age)

# Summarize regression

summary(reg_diab)##

## Call:

## lm(formula = diabetes$chol ~ diabetes$age)

##

## Residuals:

## Min 1Q Median 3Q Max

## -142.630 -25.225 -5.206 24.238 232.520

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 178.1260 6.5638 27.138 < 2e-16 ***

## diabetes$age 0.6344 0.1323 4.794 2.3e-06 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 43.27 on 400 degrees of freedom

## (1 observation deleted due to missingness)

## Multiple R-squared: 0.05434, Adjusted R-squared: 0.05198

## F-statistic: 22.99 on 1 and 400 DF, p-value: 2.304e-06tidy(reg_diab)## # A tibble: 2 x 5

## term estimate std.error statistic p.value

## <chr> <dbl> <dbl> <dbl> <dbl>

## 1 (Intercept) 178. 6.56 27.1 9.90e-93

## 2 diabetes$age 0.634 0.132 4.79 2.30e- 6glance(reg_diab)## # A tibble: 1 x 12

## r.squared adj.r.squared sigma statistic p.value df logLik AIC BIC

## <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 0.0543 0.0520 43.3 23.0 2.30e-6 1 -2084. 4174. 4186.

## # ... with 3 more variables: deviance <dbl>, df.residual <int>, nobs <int># Regression objects

names(reg_diab)## [1] "coefficients" "residuals" "effects" "rank"

## [5] "fitted.values" "assign" "qr" "df.residual"

## [9] "na.action" "xlevels" "call" "terms"

## [13] "model"# Get fitted.values

reg_diab$fitted.values## 1 2 3 4 5 6 7 8

## 207.3076 196.5231 214.9203 220.6297 218.7266 199.6950 197.1575 201.5982

## 9 10 11 12 13 14 15 16

## 206.6733 213.0171 216.1890 202.2326 195.2544 203.5013 200.9638 199.0607

## 17 18 19 20 21 22 23 24

## 209.8452 190.8137 200.9638 217.4578 222.5329 207.9420 202.2326 219.9953

## 25 26 27 29 30 31 32 33

## 193.3512 204.1357 201.5982 205.4045 203.5013 204.7701 211.1140 216.8234

## 34 35 36 37 38 39 40 41

## 216.8234 193.9856 207.9420 200.3294 207.3076 214.2859 222.5329 192.0824

## 42 43 44 45 46 47 48 49

## 211.1140 200.9638 205.4045 223.8016 201.5982 212.3827 216.1890 203.5013

## 50 51 52 53 54 55 56 57

## 213.0171 226.3392 205.4045 219.3609 206.6733 222.5329 190.8137 217.4578

## 58 59 60 61 62 63 64 65

## 236.4893 209.2108 206.0389 225.0704 200.9638 210.4796 202.2326 197.7919

## 66 67 68 69 70 71 72 73

## 195.8887 192.0824 223.1672 226.3392 235.8549 203.5013 192.7168 190.8137

## 74 75 76 77 78 79 80 81

## 203.5013 211.1140 226.3392 207.3076 208.5764 192.0824 214.9203 199.6950

## 82 83 84 85 86 87 88 89

## 216.8234 203.5013 195.8887 211.7483 220.6297 210.4796 209.2108 219.3609

## 90 91 92 93 94 95 96 97

## 212.3827 202.2326 218.7266 204.1357 220.6297 195.2544 191.4481 204.1357

## 98 99 100 101 102 103 104 105

## 207.9420 216.8234 219.3609 195.8887 204.1357 201.5982 209.8452 214.2859

## 106 107 108 109 110 111 112 113

## 195.8887 197.7919 230.7798 228.2423 221.2641 198.4263 194.6200 200.9638

## 114 115 116 117 118 119 120 121

## 211.7483 190.1793 218.0922 214.9203 211.7483 209.8452 204.1357 208.5764

## 122 123 124 125 126 127 128 129

## 215.5546 199.6950 218.0922 192.7168 191.4481 192.7168 200.9638 223.1672

## 130 131 132 133 134 135 136 137

## 218.7266 205.4045 197.7919 206.0389 216.1890 205.4045 208.5764 213.6515

## 138 139 140 141 142 143 144 145

## 213.0171 209.2108 214.9203 199.0607 208.5764 219.9953 215.5546 206.6733

## 146 147 148 149 150 151 152 153

## 211.1140 226.3392 200.9638 204.1357 190.8137 209.8452 205.4045 230.1455

## 154 155 156 157 158 159 160 161

## 200.3294 207.9420 225.7048 217.4578 197.7919 209.8452 202.8670 199.0607

## 162 163 164 165 166 167 168 169

## 214.9203 229.5111 195.2544 207.9420 199.0607 220.6297 204.7701 191.4481

## 170 171 172 173 174 175 176 177

## 210.4796 195.2544 210.4796 223.1672 209.8452 212.3827 215.5546 215.5546

## 178 179 180 181 182 183 184 185

## 203.5013 214.9203 223.8016 219.9953 192.7168 204.7701 205.4045 225.7048

## 186 187 188 189 190 191 192 193

## 219.3609 199.6950 201.5982 216.8234 200.9638 206.6733 221.2641 214.2859

## 194 195 196 197 198 199 200 201

## 204.1357 221.2641 203.5013 228.2423 217.4578 218.0922 213.0171 213.0171

## 202 203 204 205 206 207 208 209

## 195.2544 219.9953 218.0922 227.6079 221.2641 197.7919 218.7266 203.5013

## 210 211 212 213 214 215 216 217

## 216.8234 195.8887 199.6950 218.0922 213.0171 194.6200 200.9638 203.5013

## 218 219 220 221 222 223 224 225

## 206.6733 221.2641 230.1455 216.1890 197.1575 204.1357 212.3827 223.8016

## 226 227 228 229 230 231 232 233

## 207.9420 209.8452 210.4796 206.6733 202.2326 190.8137 206.0389 218.0922

## 234 235 236 237 238 239 240 241

## 209.8452 206.0389 208.5764 204.1357 196.5231 226.3392 221.8985 194.6200

## 242 243 244 245 246 247 248 249

## 222.5329 193.9856 204.7701 213.6515 197.7919 197.7919 195.2544 224.4360

## 250 251 252 253 254 255 256 257

## 198.4263 190.1793 223.1672 195.2544 197.7919 190.8137 197.7919 217.4578

## 258 259 260 261 262 263 264 265

## 206.0389 200.9638 200.9638 207.9420 197.1575 218.0922 208.5764 219.3609

## 266 267 268 269 270 271 272 273

## 215.5546 201.5982 227.6079 192.7168 202.2326 202.2326 204.1357 196.5231

## 274 275 276 277 278 279 280 281

## 209.2108 192.7168 196.5231 203.5013 202.2326 203.5013 196.5231 227.6079

## 282 283 284 285 286 287 288 289

## 209.8452 192.7168 216.1890 203.5013 216.1890 203.5013 197.1575 191.4481

## 290 291 292 293 294 295 296 297

## 218.0922 218.0922 205.4045 207.3076 218.7266 213.6515 200.3294 215.5546

## 298 299 300 301 302 303 304 305

## 192.0824 205.4045 194.6200 204.1357 205.4045 190.8137 195.8887 197.1575

## 306 307 308 309 310 311 312 313

## 219.9953 190.8137 198.4263 202.2326 216.8234 194.6200 225.0704 223.8016

## 314 315 316 317 318 319 320 321

## 191.4481 200.9638 204.7701 219.9953 199.6950 205.4045 214.2859 206.6733

## 322 323 324 325 326 327 328 329

## 206.0389 195.2544 218.0922 219.3609 197.1575 195.8887 204.1357 197.7919

## 330 331 332 333 334 335 336 337

## 199.0607 219.9953 195.8887 193.9856 194.6200 203.5013 202.2326 197.1575

## 338 339 340 341 342 343 344 345

## 211.1140 192.0824 210.4796 206.6733 211.7483 191.4481 211.7483 201.5982

## 346 347 348 349 350 351 352 353

## 199.6950 197.1575 225.0704 200.9638 206.6733 200.3294 209.8452 195.2544

## 354 355 356 357 358 359 360 361

## 211.1140 204.7701 202.8670 224.4360 195.8887 211.7483 209.2108 213.0171

## 362 363 364 365 366 367 368 369

## 201.5982 216.1890 213.6515 231.4142 190.8137 228.8767 216.1890 228.8767

## 370 371 372 373 374 375 376 377

## 196.5231 205.4045 218.0922 201.5982 190.8137 206.0389 212.3827 214.9203

## 378 379 380 381 382 383 384 385

## 200.3294 211.1140 216.1890 205.4045 215.5546 199.0607 201.5982 203.5013

## 386 387 388 389 390 391 392 393

## 202.2326 198.4263 216.1890 197.1575 204.7701 211.1140 215.5546 227.6079

## 394 395 396 397 398 399 400 401

## 210.4796 193.9856 201.5982 212.3827 234.5861 211.7483 210.4796 196.5231

## 402 403

## 204.1357 221.2641

## attr(,"label")

## [1] "Total Cholesterol"

## attr(,"class")

## [1] "labelled"# Scatterplot and regression line overlaid

plot(diabetes$age, diabetes$chol)

abline(reg_diab,lwd=2,col=2)

# Calculate the regression coefficient estimates 'by hand'.

set.seed(1)

data = data.frame(x = rnorm(30, 3, 3)) %>% mutate(y = 2+.6*x +rnorm(30, 0, 1))

linmod = lm(y~x, data = data)

summary(linmod)##

## Call:

## lm(formula = y ~ x, data = data)

##

## Residuals:

## Min 1Q Median 3Q Max

## -1.5202 -0.5050 -0.2297 0.5753 1.8534

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 2.08743 0.22958 9.092 7.53e-10 ***

## x 0.61396 0.05415 11.338 5.61e-12 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.8084 on 28 degrees of freedom

## Multiple R-squared: 0.8211, Adjusted R-squared: 0.8148

## F-statistic: 128.6 on 1 and 28 DF, p-value: 5.612e-12beta1 = with(data, sum((x - mean(x))*(y - mean(y))) / sum((x - mean(x))^2))

beta0 = with(data, mean(y) - beta1*mean(x))

c(beta0, beta1)## [1] 2.0874344 0.6139621# Notice the same values

################################################################

# Biostatistical Methods I #

# Simple Linear Regression - Inferences #

################################################################

# Load libraries

library(faraway)

library(broom)

library(dplyr)

# Read data 'Hospitals'

data_hosp<-read.csv(here::here("R_Code","R - Module 17","Hospital.csv"))

names(data_hosp)## [1] "ID" "LOS" "AGE" "INFRISK" "CULT" "XRAY" "BEDS"

## [8] "MEDSCHL" "REGION" "CENSUS" "NURSE" "FACS"# Look at data structure

str(data_hosp)## 'data.frame': 113 obs. of 12 variables:

## $ ID : int 1 2 3 4 5 6 7 8 9 10 ...

## $ LOS : num 7.13 8.82 8.34 8.95 11.2 ...

## $ AGE : num 55.7 58.2 56.9 53.7 56.5 50.9 57.8 45.7 48.2 56.3 ...

## $ INFRISK: num 4.1 1.6 2.7 5.6 5.7 5.1 4.6 5.4 4.3 6.3 ...

## $ CULT : num 9 3.8 8.1 18.9 34.5 21.9 16.7 60.5 24.4 29.6 ...

## $ XRAY : num 39.6 51.7 74 122.8 88.9 ...

## $ BEDS : int 279 80 107 147 180 150 186 640 182 85 ...

## $ MEDSCHL: int 2 2 2 2 2 2 2 1 2 2 ...

## $ REGION : int 4 2 3 4 1 2 3 2 3 1 ...

## $ CENSUS : int 207 51 82 53 134 147 151 399 130 59 ...

## $ NURSE : int 241 52 54 148 151 106 129 360 118 66 ...

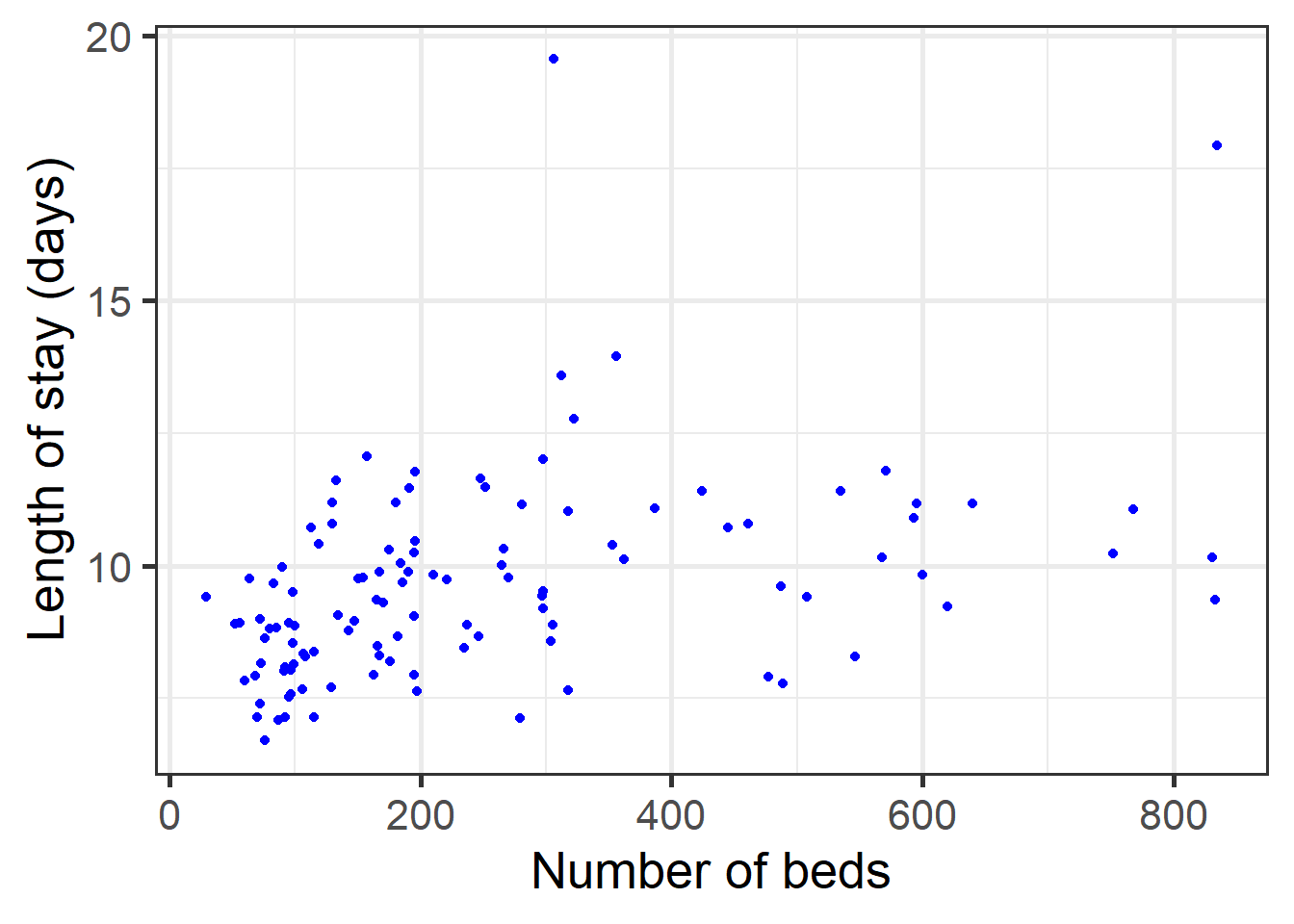

## $ FACS : num 60 40 20 40 40 40 40 60 40 40 ...# Scatter plot (Y) vs (X)

# LOS: length of stay(Y)

# BEDS: number of beds(X)

data_hosp %>%

ggplot(aes(BEDS, LOS)) + geom_point(color='blue') + theme_bw(base_size=20) +

labs(x="Number of beds", y="Length of stay (days)")

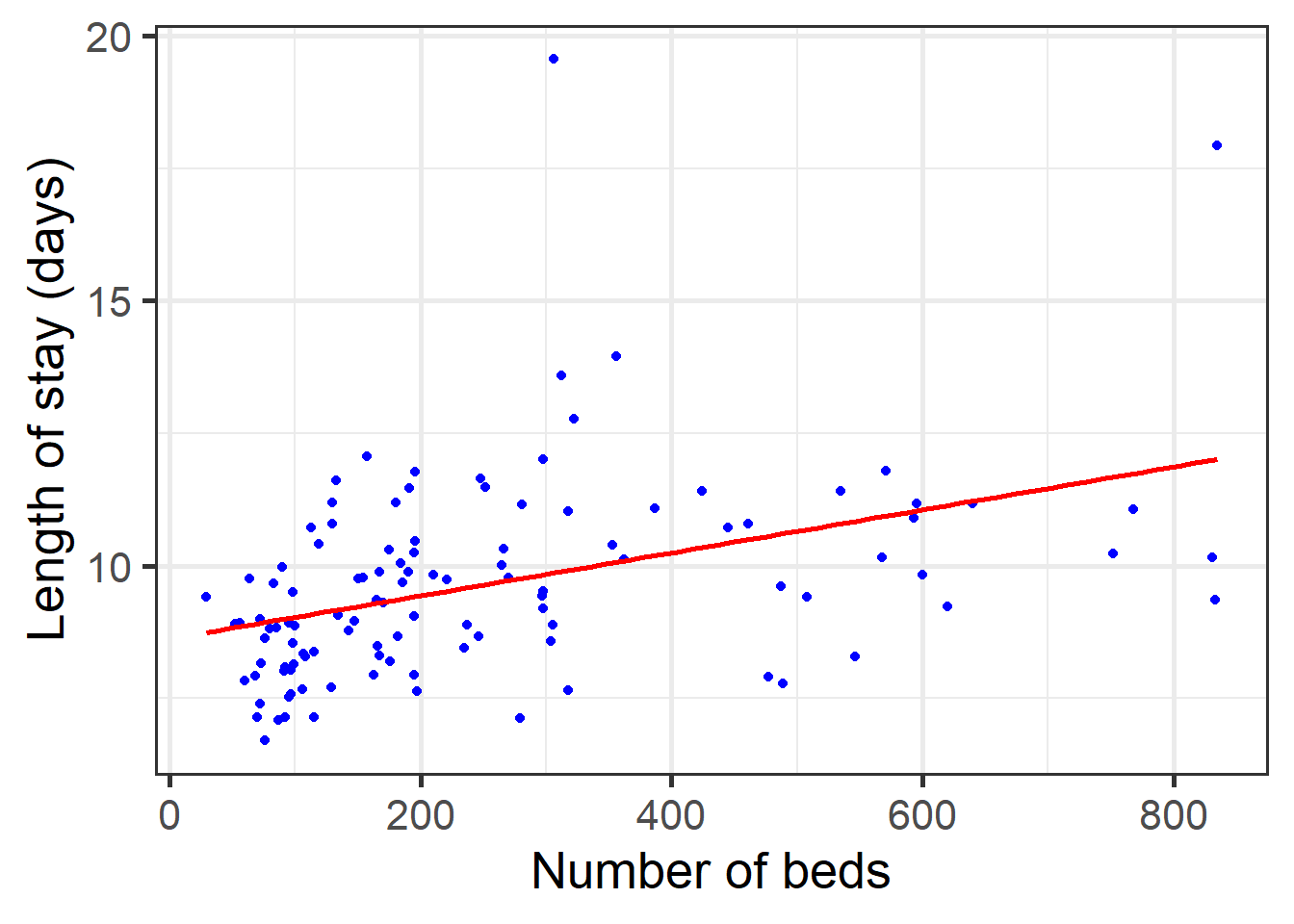

# Simple linear regression

reg_hos<-lm(data_hosp$LOS~data_hosp$BEDS)

# Analyze the regression results

summary(reg_hos)##

## Call:

## lm(formula = data_hosp$LOS ~ data_hosp$BEDS)

##

## Residuals:

## Min 1Q Median 3Q Max

## -2.8291 -1.0028 -0.1302 0.6782 9.6933

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 8.6253643 0.2720589 31.704 < 2e-16 ***

## data_hosp$BEDS 0.0040566 0.0008584 4.726 6.77e-06 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.752 on 111 degrees of freedom

## Multiple R-squared: 0.1675, Adjusted R-squared: 0.16

## F-statistic: 22.33 on 1 and 111 DF, p-value: 6.765e-06# Get the ANOVA table

anova(reg_hos)## Analysis of Variance Table

##

## Response: data_hosp$LOS

## Df Sum Sq Mean Sq F value Pr(>F)

## data_hosp$BEDS 1 68.54 68.542 22.333 6.765e-06 ***

## Residuals 111 340.67 3.069

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1# Residual st error: MSE=sigma^2

glance(reg_hos)$sigma## [1] 1.75188# Scatter plot with regression line overlaid

data_hosp %>%

ggplot(aes(BEDS, LOS)) + geom_point(color='blue') + theme_bw(base_size=20) +

geom_smooth(method='lm', se=FALSE, color='red') +

labs(x="Number of beds", y="Length of stay (days)")## `geom_smooth()` using formula 'y ~ x'

# Scatter plot with regression line overlaid and 95% confidence bands

data_hosp %>%

ggplot(aes(BEDS, LOS)) + geom_point(color='blue') + theme_bw(base_size=20) +

geom_smooth(method='lm', se=TRUE, color='red') +

labs(x="Number of beds", y="Length of stay (days)")## `geom_smooth()` using formula 'y ~ x'

# How do we calculate the 95% CI for the slope?

# Interpretation: 95% CI for the expected/mean difference in LOS for 1 bed differene

# Get the critical t value for alpha=0.05 and n-2 df

qt(0.975,111) # In data hospital, df=n-2=113-2=111## [1] 1.981567coef<-summary(reg_hos)$coefficients[2,1]

err<-summary(reg_hos)$coefficients[2,2]

slope_int<-coef + c(-1,1)*err*qt(0.975, 111)

# CIs for both slope and intercept

confint(reg_hos)## 2.5 % 97.5 %

## (Intercept) 8.086261517 9.164467086

## data_hosp$BEDS 0.002355649 0.005757623confint(reg_hos,level=0.95)## 2.5 % 97.5 %

## (Intercept) 8.086261517 9.164467086

## data_hosp$BEDS 0.002355649 0.005757623# How do we calculate the 95% CI for 100 beds difference?

coef<-summary(reg_hos)$coefficients[2,1]

err<-summary(reg_hos)$coefficients[2,2]

slope_int100<-100*coef + c(-1,1)*(100*err)*qt(0.975, 111)

slope_int100## [1] 0.2355649 0.5757623#############################################################################

# Calculate 95% CIs using predict function

# If 'newdata' is omitted the predictions are based on the data used for the fit, like in the case below.

pred.clim <- predict.lm(reg_hos, interval="confidence")

datapred <- data.frame(cbind(data_hosp$BEDS, data_hosp$LOS, pred.clim))

plot(datapred[,1],datapred[,2],xlab="Number of Beds", ylab="Length of stay (days)")

#abline(reg_hos,lwd=2,col=2)

lines(datapred[,1],datapred[,3], lwd=2)

lines(datapred[,1],datapred[,5], lty=1, col=3, type='l')

lines(datapred[,1],datapred[,4], lty=1, col=3,type='l')

# Calculate 95% PIs for fitted values using predict function

# Compare to prediction intervals: of course that the PIs are wider than CIs

pred.plim <- predict.lm(reg_hos, interval="prediction") ## Warning in predict.lm(reg_hos, interval = "prediction"): predictions on current data refer to _future_ responsesdatapred1 <- data.frame(cbind(data_hosp$BEDS, data_hosp$LOS, pred.plim))

#abline(reg_hos,lwd=2,col=2)

lines(datapred1[,1],datapred1[,3], lwd=2)

lines(datapred1[,1],datapred1[,5], lty=1, col=2, type='l')

lines(datapred1[,1],datapred1[,4], lty=1, col=2,type='l')

##############################################################

# Calculate the correlation coefficient between LOS and BEDS

cor(data_hosp$LOS, data_hosp$BEDS)## [1] 0.4092652# Look at the R_squared. How does it compare to the correlation? Same value, but only for SLR.

cor(data_hosp$LOS, data_hosp$BEDS)^2## [1] 0.167498MLR

################################################################

# Biostatistical Methods I #

# Multiple Linear Regression #

################################################################

library(faraway)

library(broom)

library(dplyr)

# Read data 'Hospitals'

data_hosp<-read.csv(here::here("R_Code/R - Module 17/Hospital.csv"))

names(data_hosp)## [1] "ID" "LOS" "AGE" "INFRISK" "CULT" "XRAY" "BEDS"

## [8] "MEDSCHL" "REGION" "CENSUS" "NURSE" "FACS"# Scatter plot with regression line overlaid and 95% confidence bands

data_hosp %>%

ggplot(aes(BEDS, LOS)) + geom_point(color='blue') + theme_bw(base_size=20) +

geom_smooth(method='lm', se=TRUE, color='red') +

labs(x="Number of beds", y="Length of stay (days)")## `geom_smooth()` using formula 'y ~ x'

# Simple linear regression: Length of stay (LOS) vs number of BEDS

reg_hos<-lm(data_hosp$LOS~data_hosp$BEDS)

summary(reg_hos)##

## Call:

## lm(formula = data_hosp$LOS ~ data_hosp$BEDS)

##

## Residuals:

## Min 1Q Median 3Q Max

## -2.8291 -1.0028 -0.1302 0.6782 9.6933

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 8.6253643 0.2720589 31.704 < 2e-16 ***

## data_hosp$BEDS 0.0040566 0.0008584 4.726 6.77e-06 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.752 on 111 degrees of freedom

## Multiple R-squared: 0.1675, Adjusted R-squared: 0.16

## F-statistic: 22.33 on 1 and 111 DF, p-value: 6.765e-06# Get the ANOVA table

anova(reg_hos)## Analysis of Variance Table

##

## Response: data_hosp$LOS

## Df Sum Sq Mean Sq F value Pr(>F)

## data_hosp$BEDS 1 68.54 68.542 22.333 6.765e-06 ***

## Residuals 111 340.67 3.069

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1# Matrix model

model.matrix(reg_hos)## (Intercept) data_hosp$BEDS

## 1 1 279

## 2 1 80

## 3 1 107

## 4 1 147

## 5 1 180

## 6 1 150

## 7 1 186

## 8 1 640

## 9 1 182

## 10 1 85

## 11 1 768

## 12 1 167

## 13 1 322

## 14 1 97

## 15 1 72

## 16 1 387

## 17 1 108

## 18 1 133

## 19 1 134

## 20 1 833

## 21 1 95

## 22 1 195

## 23 1 270

## 24 1 600

## 25 1 298

## 26 1 546

## 27 1 170

## 28 1 176

## 29 1 248

## 30 1 167

## 31 1 318

## 32 1 210

## 33 1 196

## 34 1 312

## 35 1 221

## 36 1 266

## 37 1 90

## 38 1 60

## 39 1 196

## 40 1 73

## 41 1 166

## 42 1 113

## 43 1 130

## 44 1 362

## 45 1 115

## 46 1 831

## 47 1 306

## 48 1 593

## 49 1 106

## 50 1 305

## 51 1 252

## 52 1 620

## 53 1 535

## 54 1 157

## 55 1 76

## 56 1 281

## 57 1 70

## 58 1 318

## 59 1 445

## 60 1 191

## 61 1 119

## 62 1 595

## 63 1 68

## 64 1 83

## 65 1 489

## 66 1 508

## 67 1 265

## 68 1 304

## 69 1 487

## 70 1 97

## 71 1 72

## 72 1 87

## 73 1 298

## 74 1 184

## 75 1 235

## 76 1 76

## 77 1 52

## 78 1 752

## 79 1 237

## 80 1 175

## 81 1 461

## 82 1 195

## 83 1 197

## 84 1 143

## 85 1 92

## 86 1 195

## 87 1 477

## 88 1 353

## 89 1 165

## 90 1 424

## 91 1 100

## 92 1 95

## 93 1 56

## 94 1 99

## 95 1 154

## 96 1 98

## 97 1 246

## 98 1 298

## 99 1 163

## 100 1 568

## 101 1 64

## 102 1 190

## 103 1 92

## 104 1 356

## 105 1 297

## 106 1 130

## 107 1 115

## 108 1 91

## 109 1 571

## 110 1 98

## 111 1 129

## 112 1 835

## 113 1 29

## attr(,"assign")

## [1] 0 1model.matrix(reg_hos) %>% head## (Intercept) data_hosp$BEDS

## 1 1 279

## 2 1 80

## 3 1 107

## 4 1 147

## 5 1 180

## 6 1 150# Multiple linear regression:

# Var 1: Number of BEDS

# Var 2: INFRISK (prob. % of getting an infection during hospitalization)

regmult1_hos<-lm(data_hosp$LOS~data_hosp$BEDS + data_hosp$INFRISK)

# Analyze the regression results

summary(regmult1_hos)##

## Call:

## lm(formula = data_hosp$LOS ~ data_hosp$BEDS + data_hosp$INFRISK)

##

## Residuals:

## Min 1Q Median 3Q Max

## -2.8624 -0.9904 -0.1996 0.6671 8.4219

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 6.2703521 0.5038751 12.444 < 2e-16 ***

## data_hosp$BEDS 0.0024747 0.0008236 3.005 0.00329 **

## data_hosp$INFRISK 0.6323812 0.1184476 5.339 5.08e-07 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.568 on 110 degrees of freedom

## Multiple R-squared: 0.3388, Adjusted R-squared: 0.3268

## F-statistic: 28.19 on 2 and 110 DF, p-value: 1.31e-10# Multiple linear regression: BEDS and INFRISK and NURSE

regmult2_hos<-lm(data_hosp$LOS~data_hosp$BEDS + data_hosp$INFRISK+data_hosp$NURSE)

summary(regmult2_hos)##

## Call:

## lm(formula = data_hosp$LOS ~ data_hosp$BEDS + data_hosp$INFRISK +

## data_hosp$NURSE)

##

## Residuals:

## Min 1Q Median 3Q Max

## -2.8692 -1.0457 -0.1371 0.9099 8.1339

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 6.148286 0.499875 12.300 < 2e-16 ***

## data_hosp$BEDS 0.006007 0.001882 3.192 0.00185 **

## data_hosp$INFRISK 0.674805 0.118463 5.696 1.05e-07 ***

## data_hosp$NURSE -0.005504 0.002645 -2.081 0.03982 *

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.545 on 109 degrees of freedom

## Multiple R-squared: 0.3641, Adjusted R-squared: 0.3466

## F-statistic: 20.8 on 3 and 109 DF, p-value: 9.915e-11# Multiple linear regression: BEDS and MEDSCHL (Medical School Affiliation: 1-Yes, 2-No)

# Recode MEDSCHL: Yes:1 and No:0

data_hosp$MS<-ifelse(data_hosp$MEDSCHL==1,1,ifelse(data_hosp$MEDSCHL==2, 0, NA))

# Multiple linear regression: INFRISK and new MS (Medical School Affiliation: 1-Yes, 0-No)

regmult3_hos<-lm(data_hosp$LOS~data_hosp$INFRISK +data_hosp$MS)

summary(regmult3_hos)##

## Call:

## lm(formula = data_hosp$LOS ~ data_hosp$INFRISK + data_hosp$MS)

##

## Residuals:

## Min 1Q Median 3Q Max

## -2.9037 -0.8739 -0.1142 0.5965 8.5568

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 6.4547 0.5146 12.542 <2e-16 ***

## data_hosp$INFRISK 0.6998 0.1156 6.054 2e-08 ***

## data_hosp$MS 0.9717 0.4316 2.251 0.0263 *

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.595 on 110 degrees of freedom

## Multiple R-squared: 0.3161, Adjusted R-squared: 0.3036

## F-statistic: 25.42 on 2 and 110 DF, p-value: 8.42e-10# Categorical predictor REGION: multiple levels

# Simple linear regression with predictor REGION (1:NE, 2:NC, 3:S, 4:W)

data_hosp %>% lm(LOS~REGION, data=.) %>% summary##

## Call:

## lm(formula = LOS ~ REGION, data = .)

##

## Residuals:

## Min 1Q Median 3Q Max

## -2.8883 -1.0283 -0.1464 0.7317 8.6417

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 11.8502 0.4017 29.497 < 2e-16 ***

## REGION -0.9319 0.1565 -5.956 3.09e-08 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.671 on 111 degrees of freedom

## Multiple R-squared: 0.2422, Adjusted R-squared: 0.2354

## F-statistic: 35.48 on 1 and 111 DF, p-value: 3.093e-08# How does it look?

# Make it a factor

data_hosp %>% lm(LOS~factor(REGION), data=.) %>% summary##

## Call:

## lm(formula = LOS ~ factor(REGION), data = .)

##

## Residuals:

## Min 1Q Median 3Q Max

## -3.0589 -1.0314 -0.0234 0.6811 8.4711

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 11.0889 0.3165 35.040 < 2e-16 ***

## factor(REGION)2 -1.4055 0.4333 -3.243 0.00157 **

## factor(REGION)3 -1.8976 0.4194 -4.524 1.55e-05 ***

## factor(REGION)4 -2.9752 0.5248 -5.669 1.19e-07 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.675 on 109 degrees of freedom

## Multiple R-squared: 0.2531, Adjusted R-squared: 0.2325

## F-statistic: 12.31 on 3 and 109 DF, p-value: 5.376e-07# Compare intercept model

data_hosp %>% lm(LOS~factor(REGION), data=.) %>% summary##

## Call:

## lm(formula = LOS ~ factor(REGION), data = .)

##

## Residuals:

## Min 1Q Median 3Q Max

## -3.0589 -1.0314 -0.0234 0.6811 8.4711

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 11.0889 0.3165 35.040 < 2e-16 ***

## factor(REGION)2 -1.4055 0.4333 -3.243 0.00157 **

## factor(REGION)3 -1.8976 0.4194 -4.524 1.55e-05 ***

## factor(REGION)4 -2.9752 0.5248 -5.669 1.19e-07 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.675 on 109 degrees of freedom

## Multiple R-squared: 0.2531, Adjusted R-squared: 0.2325

## F-statistic: 12.31 on 3 and 109 DF, p-value: 5.376e-07# To No intercept model

data_hosp %>% lm(LOS~0+factor(REGION), data=.) %>% summary##

## Call:

## lm(formula = LOS ~ 0 + factor(REGION), data = .)

##

## Residuals:

## Min 1Q Median 3Q Max

## -3.0589 -1.0314 -0.0234 0.6811 8.4711

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## factor(REGION)1 11.0889 0.3165 35.04 <2e-16 ***

## factor(REGION)2 9.6834 0.2960 32.71 <2e-16 ***

## factor(REGION)3 9.1914 0.2753 33.39 <2e-16 ***

## factor(REGION)4 8.1137 0.4186 19.38 <2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.675 on 109 degrees of freedom

## Multiple R-squared: 0.972, Adjusted R-squared: 0.971

## F-statistic: 947 on 4 and 109 DF, p-value: < 2.2e-16# Change the reference category for REGION (from 1 to 3)

# Intercept added

data_hosp %>% mutate(REGION=relevel(factor(REGION),ref=3)) %>% lm(LOS~factor(REGION), data=.) %>% summary##

## Call:

## lm(formula = LOS ~ factor(REGION), data = .)

##

## Residuals:

## Min 1Q Median 3Q Max

## -3.0589 -1.0314 -0.0234 0.6811 8.4711

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 9.1914 0.2753 33.387 < 2e-16 ***

## factor(REGION)1 1.8976 0.4194 4.524 1.55e-05 ***

## factor(REGION)2 0.4921 0.4043 1.217 0.2261

## factor(REGION)4 -1.0776 0.5010 -2.151 0.0337 *

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.675 on 109 degrees of freedom

## Multiple R-squared: 0.2531, Adjusted R-squared: 0.2325

## F-statistic: 12.31 on 3 and 109 DF, p-value: 5.376e-07# Multiple linear regression: INFRISK, new MS and Region (1:NE, 2:NC, 3:S, 4:W)

regmult4_hos<-lm(data_hosp$LOS~data_hosp$INFRISK +data_hosp$MS+factor(data_hosp$REGION))

summary(regmult4_hos)##

## Call:

## lm(formula = data_hosp$LOS ~ data_hosp$INFRISK + data_hosp$MS +

## factor(data_hosp$REGION))

##

## Residuals:

## Min 1Q Median 3Q Max

## -2.9744 -0.6985 -0.1582 0.5349 7.6219

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 7.9165 0.5641 14.035 < 2e-16 ***

## data_hosp$INFRISK 0.6187 0.1046 5.915 4.03e-08 ***

## data_hosp$MS 0.9243 0.3816 2.422 0.0171 *

## factor(data_hosp$REGION)2 -1.1537 0.3660 -3.152 0.0021 **

## factor(data_hosp$REGION)3 -1.2298 0.3635 -3.384 0.0010 **

## factor(data_hosp$REGION)4 -2.6290 0.4412 -5.959 3.29e-08 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.399 on 107 degrees of freedom

## Multiple R-squared: 0.4885, Adjusted R-squared: 0.4646

## F-statistic: 20.44 on 5 and 107 DF, p-value: 2.843e-14# 'General' global test for all predictors

anova(regmult4_hos)## Analysis of Variance Table

##

## Response: data_hosp$LOS

## Df Sum Sq Mean Sq F value Pr(>F)

## data_hosp$INFRISK 1 116.446 116.446 59.5250 6.693e-12 ***

## data_hosp$MS 1 12.897 12.897 6.5927 0.01162 *

## factor(data_hosp$REGION) 3 70.549 23.516 12.0211 7.640e-07 ***

## Residuals 107 209.319 1.956

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1# Multiple linear regression: new MS, Region (1:NE, 2:NC, 3:S, 4:W) and their interaction

regmult5_hos<-lm(LOS~INFRISK*MS, data=data_hosp)

summary(regmult5_hos)##

## Call:

## lm(formula = LOS ~ INFRISK * MS, data = data_hosp)

##

## Residuals:

## Min 1Q Median 3Q Max

## -2.8986 -0.8504 -0.1881 0.7271 8.5990

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 6.53307 0.54276 12.037 < 2e-16 ***

## INFRISK 0.68122 0.12254 5.559 1.94e-07 ***

## MS 0.07814 1.95075 0.040 0.968

## INFRISK:MS 0.17859 0.38013 0.470 0.639

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.601 on 109 degrees of freedom

## Multiple R-squared: 0.3175, Adjusted R-squared: 0.2987

## F-statistic: 16.9 on 3 and 109 DF, p-value: 4.391e-09# Vizualize interaction for reg5: LOS vs INFRISK by MS affiliation

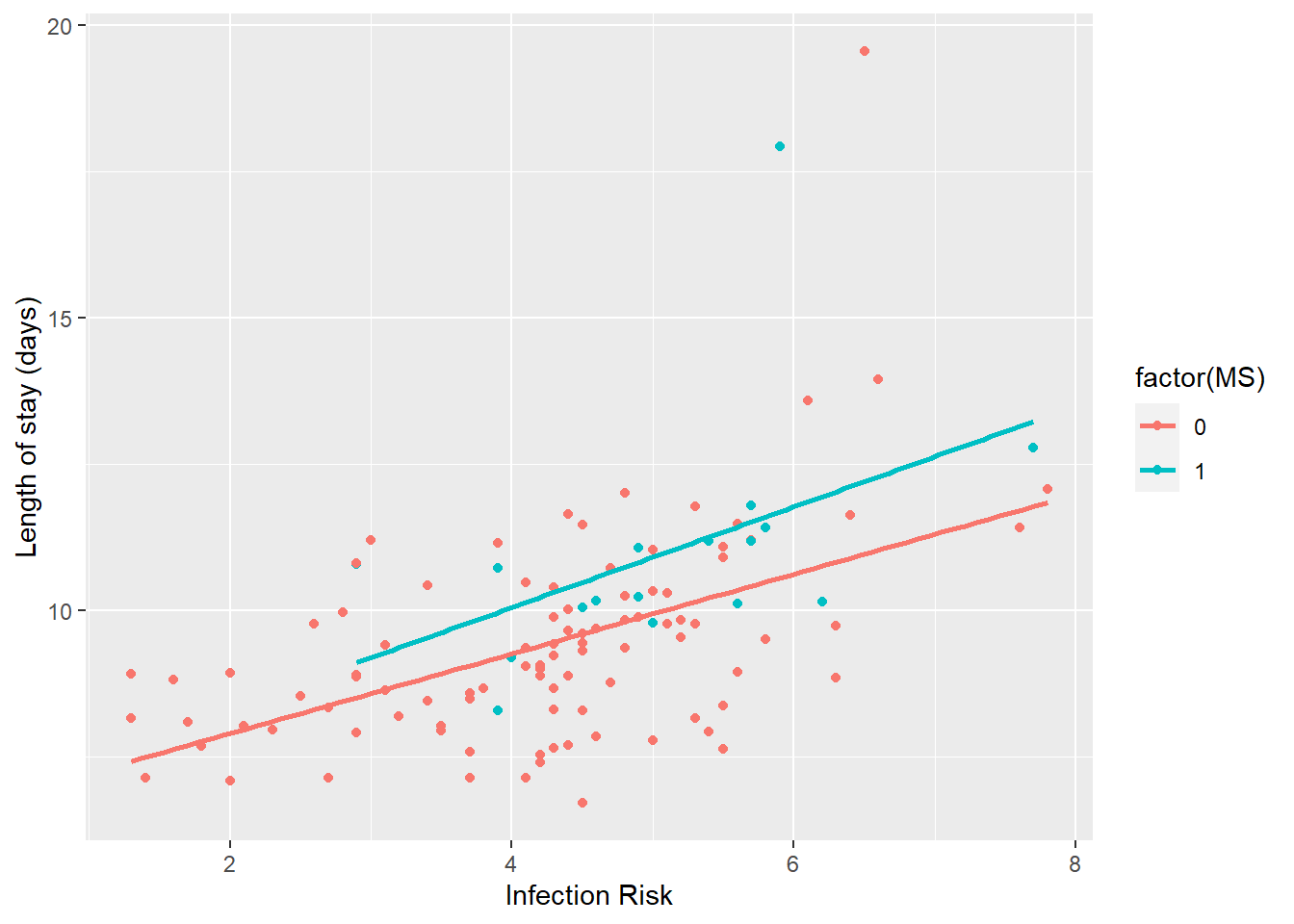

qplot(x = INFRISK, y = LOS, data = data_hosp, color = factor(MS)) +

geom_smooth(method = "lm", se=FALSE) +

labs(x="Infection Risk", y="Length of stay (days)")## `geom_smooth()` using formula 'y ~ x'

# Lines look fairly parallel, in line with the non-sig interaction result.Diagonsis

################################################################

# Biostatistical Methods I #

# Multiple Linear Regression #

# Model Diagnostics #

################################################################

library(dplyr)

library(HH)## Loading required package: lattice##

## Attaching package: 'lattice'## The following object is masked from 'package:faraway':

##

## melanoma## Loading required package: grid## Loading required package: latticeExtra##

## Attaching package: 'latticeExtra'## The following object is masked from 'package:ggplot2':

##

## layer## Loading required package: gridExtra##

## Attaching package: 'gridExtra'## The following object is masked from 'package:dplyr':

##

## combine##

## Attaching package: 'HH'## The following objects are masked from 'package:faraway':

##

## logit, vif## The following object is masked from 'package:purrr':

##

## transpose# Read data Surgical.csv

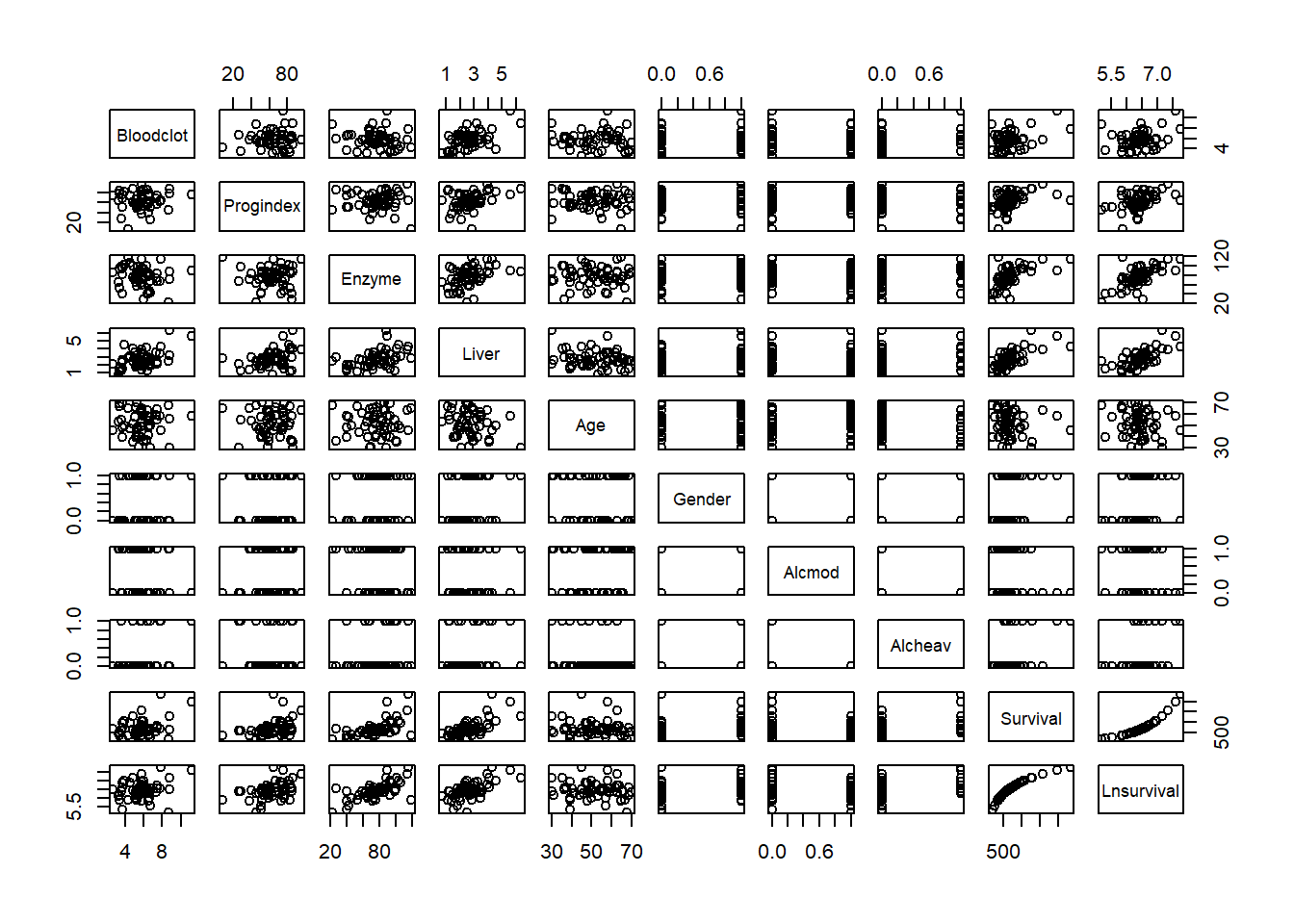

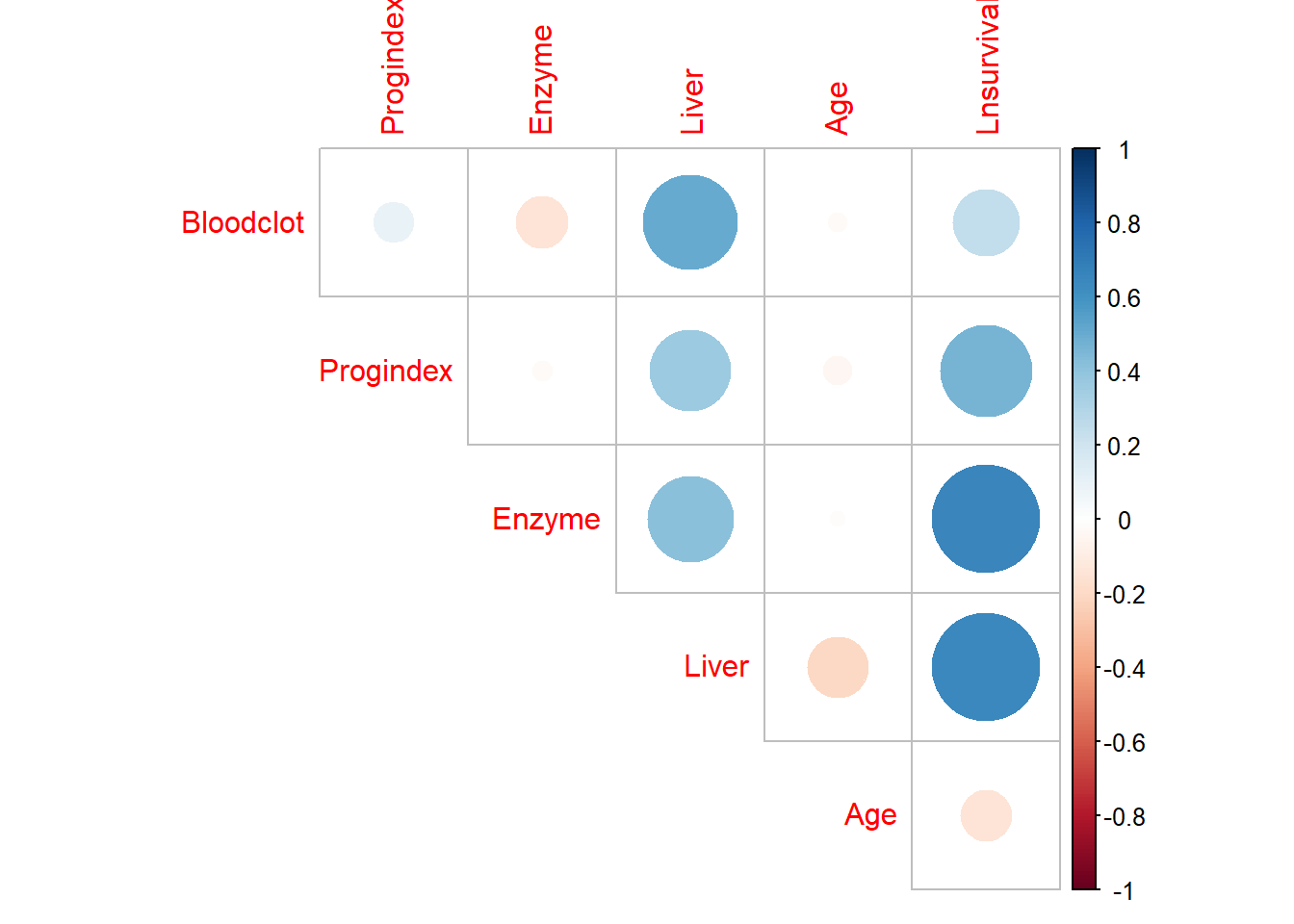

data_surg<-read.csv(here::here("R_Code/R - Module 20/Surgical.csv"))

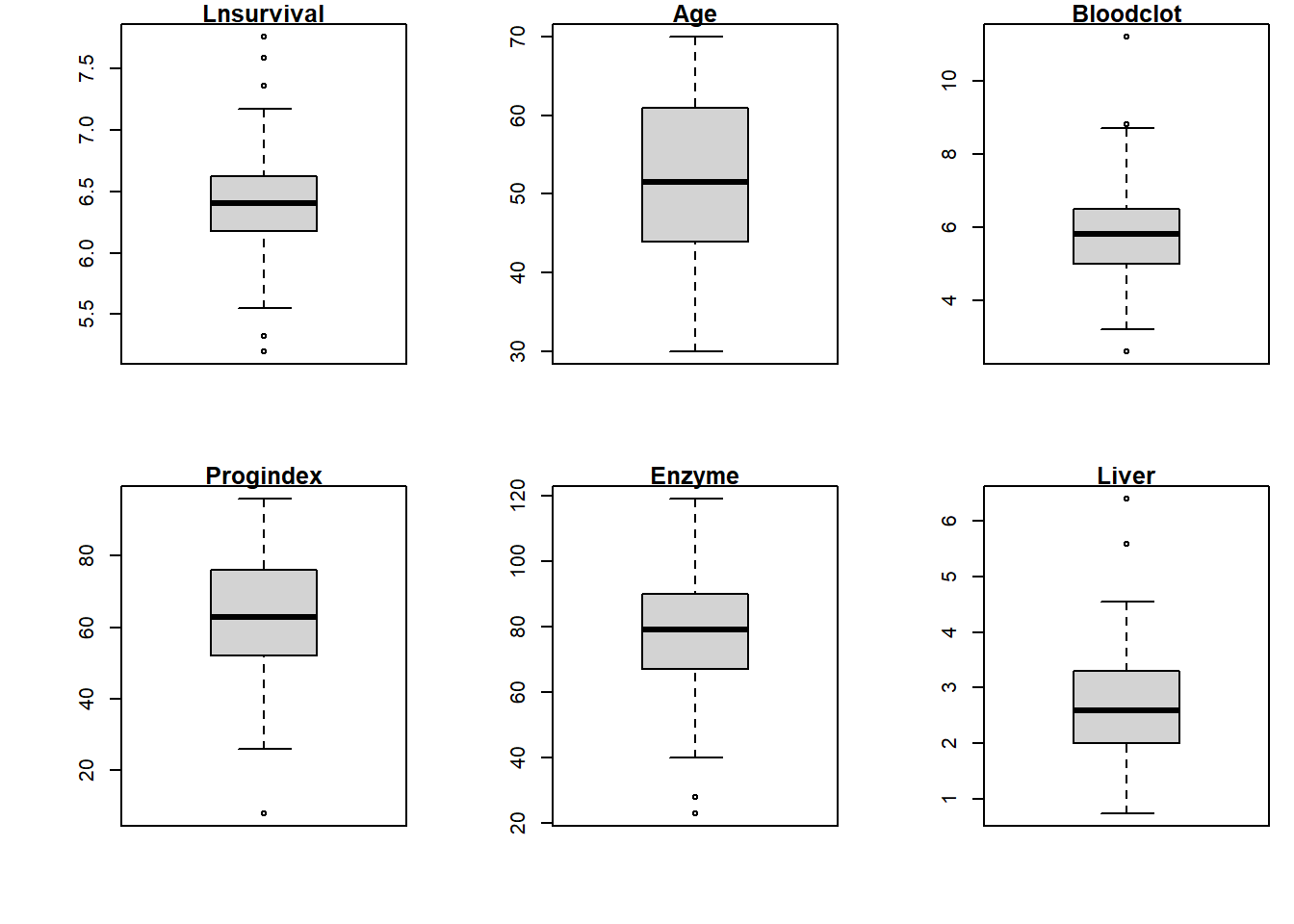

names(data_surg)## [1] "Bloodclot" "Progindex" "Enzyme" "Liver" "Age"

## [6] "Gender" "Alcmod" "Alcheav" "Survival" "Lnsurvival"#attach(data_surg)

# Residuals vs fitted values plot

par(mfrow = c(1, 2))

fit1 <- lm(Survival ~ Bloodclot + Progindex + Enzyme + Liver, data=data_surg)

plot(fitted(fit1), resid(fit1), xlab = "Predicted/Fitted value", ylab = "Residual")

title("(a) Residual Plot for Y (Survival) ")

abline(0, 0)

# MLR with LnSurvival - natural log transformation of "Survival" outcome

fit2 <- lm(Lnsurvival ~ Bloodclot + Progindex + Enzyme + Liver, data=data_surg)

plot(fitted(fit2), resid(fit2), xlab = "Predicted/Fitted value", ylab = "Residual")

title("(b) Residual Plot for lnY (LnSurvival)")

abline(0, 0)

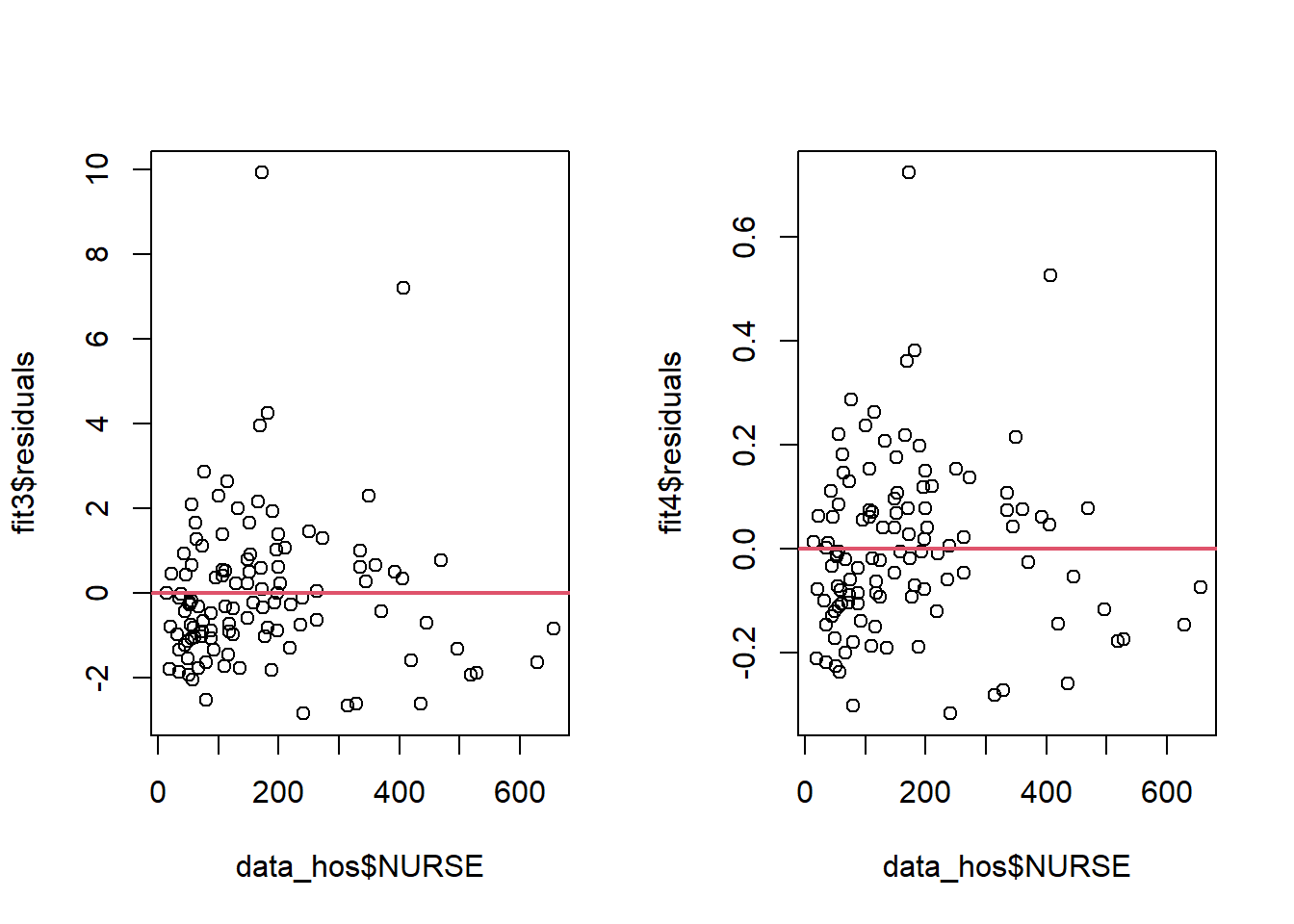

#Residuals vs one covariate: use data Hospital.csv

data_hos<-read.csv(here::here("R_Code/R - Module 17/Hospital.csv"))

fit3<-lm(LOS~NURSE,data=data_hos)

plot(data_hos$NURSE, fit3$residuals)

abline(h=0, lwd=2, col=2)

#Residuals vs one covariate

fit4=lm(log(LOS)~NURSE,data=data_hos)

plot(data_hos$NURSE, fit4$residuals)

abline(h=0, lwd=2, col=2)

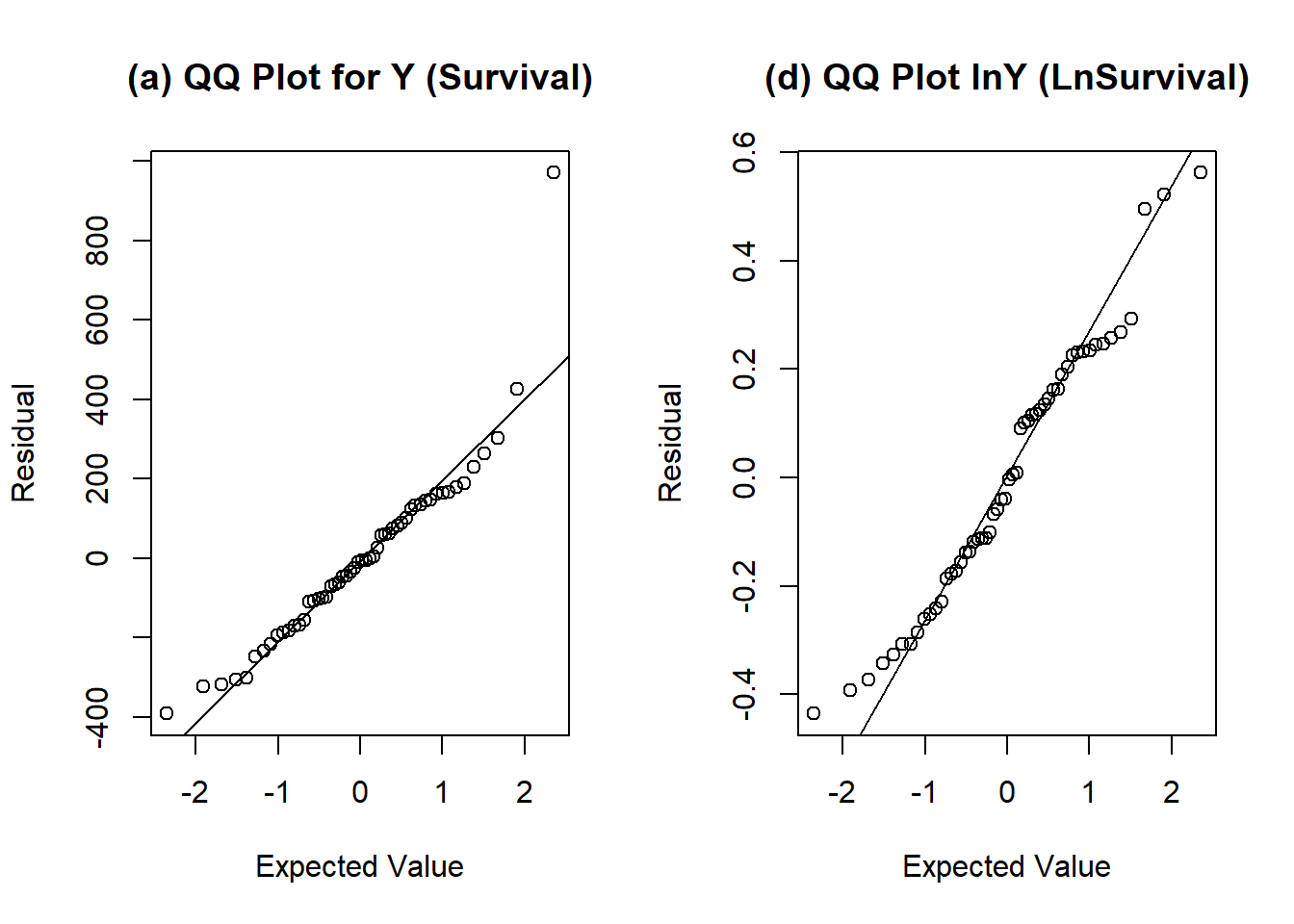

# Quantile-Quantile plot (QQ-plot)

par(mfrow = c(1, 2))

qqnorm(resid(fit1), xlab = "Expected Value", ylab = "Residual", main = "")

qqline(resid(fit1))

title("(a) QQ Plot for Y (Survival)")

qqnorm(resid(fit2), xlab = "Expected Value", ylab = "Residual", main = "")

qqline(resid(fit2))

title("(d) QQ Plot lnY (LnSurvival)")

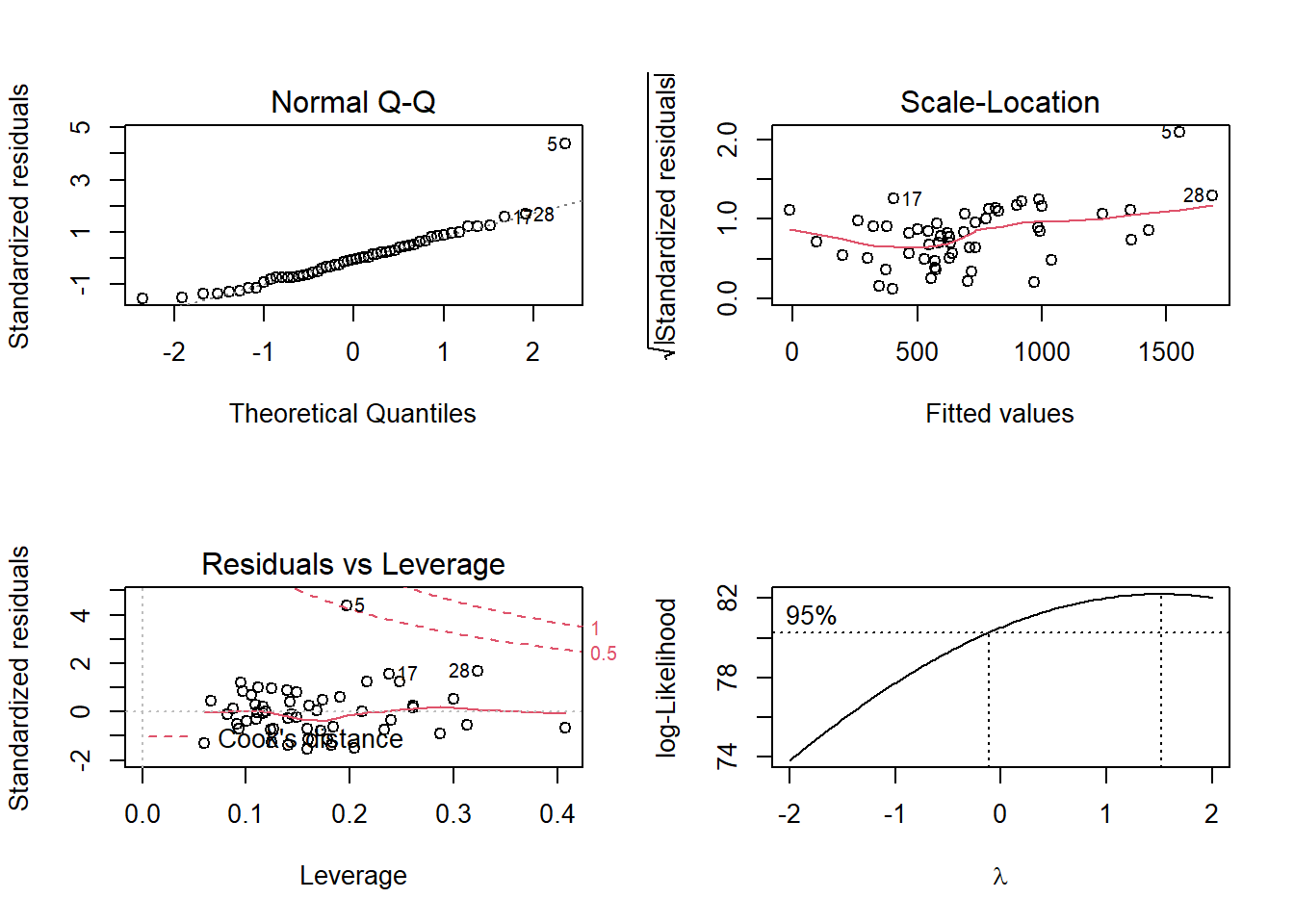

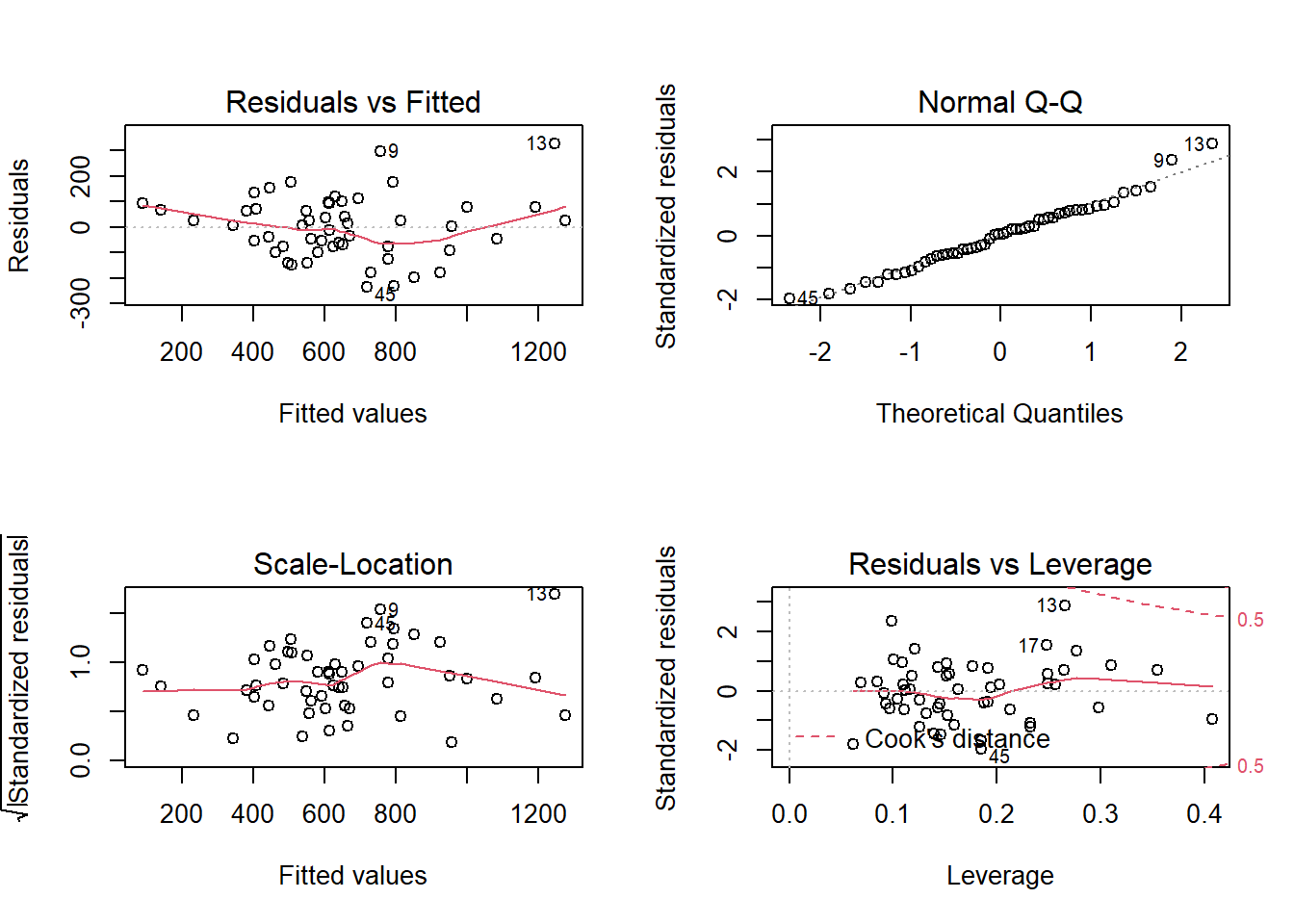

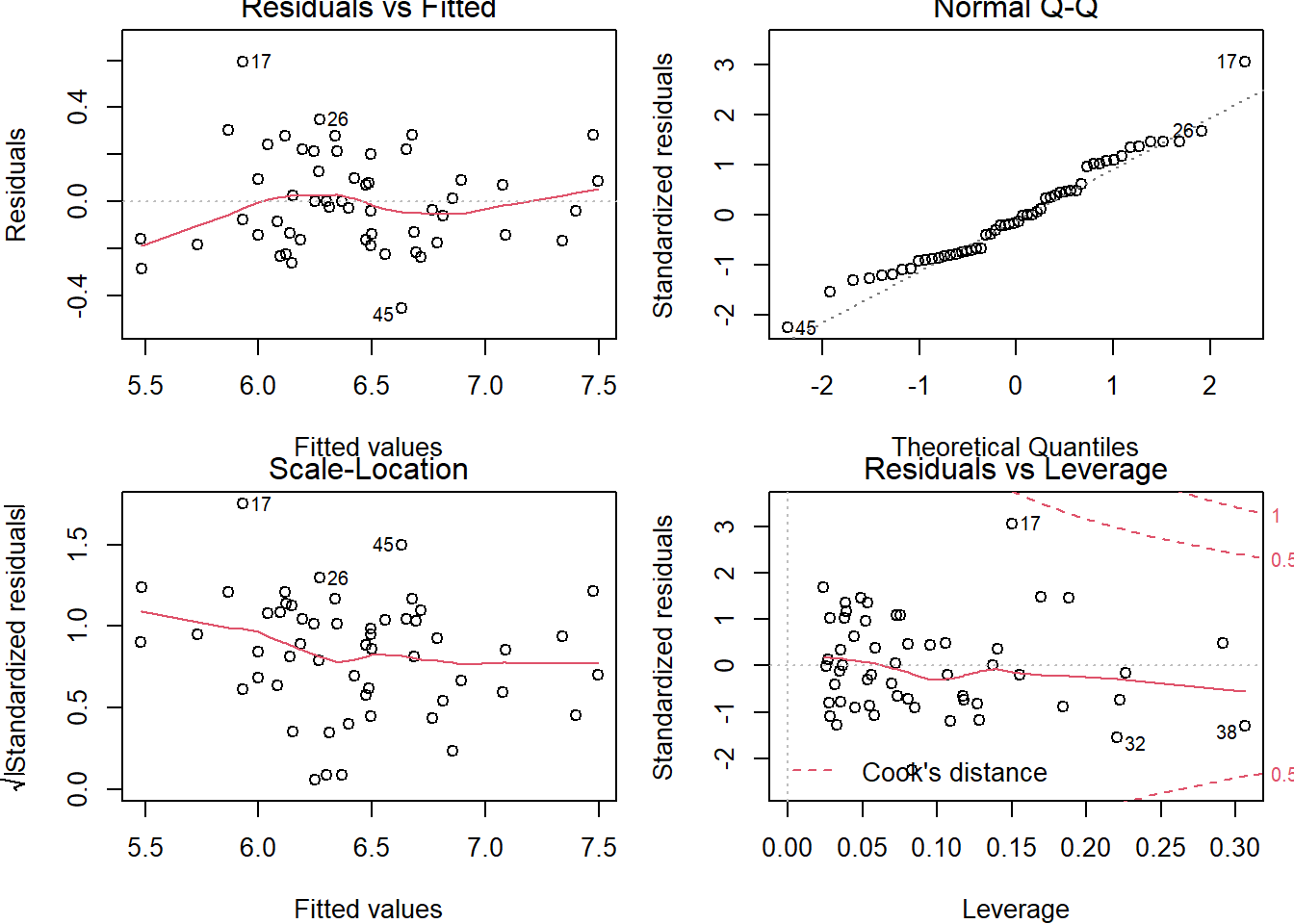

# Obtain all (4) diagnostic plots: EASIER and fast check of the MLR diagnostics

# Plot the regression object

par(mfrow=c(2,2))

plot(fit2)

#################################################################################

# Box-Cox transformation #

#################################################################################

library(MASS)

fit1 <- lm(Survival ~ Bloodclot, data=data_surg)

boxcox(fit1) # default grid of lambdas is -2 to 2 by 0.1

# Could change grid of lambda values

boxcox(fit1, lambda = seq(-3, 3, by=0.25) )

# Box Cox for multiple regression

mult.fit1 <- lm(Survival ~ Bloodclot + Progindex + Enzyme + Liver + Age + Gender + Alcmod + Alcheav, data=data_surg)

summary(mult.fit1)##

## Call:

## lm(formula = Survival ~ Bloodclot + Progindex + Enzyme + Liver +

## Age + Gender + Alcmod + Alcheav, data = data_surg)

##

## Residuals:

## Min 1Q Median 3Q Max

## -285.36 -132.75 -10.00 89.48 790.12

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -1148.823 242.328 -4.741 2.17e-05 ***

## Bloodclot 62.390 24.470 2.550 0.014258 *

## Progindex 8.973 1.874 4.788 1.86e-05 ***

## Enzyme 9.888 1.742 5.677 9.39e-07 ***

## Liver 50.413 44.959 1.121 0.268109

## Age -0.951 2.649 -0.359 0.721231

## Gender 15.874 58.475 0.271 0.787269

## Alcmod 7.713 64.956 0.119 0.906007

## Alcheav 320.697 85.070 3.770 0.000474 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 201.4 on 45 degrees of freedom

## Multiple R-squared: 0.7818, Adjusted R-squared: 0.7431

## F-statistic: 20.16 on 8 and 45 DF, p-value: 1.607e-12boxcox(mult.fit1)

plot(mult.fit1)

mult.fit2 <- lm(Lnsurvival ~ Bloodclot + Progindex + Enzyme + Liver + Age + Gender + Alcmod + Alcheav, data=data_surg)

summary(mult.fit2)##

## Call:

## lm(formula = Lnsurvival ~ Bloodclot + Progindex + Enzyme + Liver +

## Age + Gender + Alcmod + Alcheav, data = data_surg)

##

## Residuals:

## Min 1Q Median 3Q Max

## -0.35562 -0.13833 -0.05158 0.14949 0.46472

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 4.050515 0.251756 16.089 < 2e-16 ***

## Bloodclot 0.068512 0.025422 2.695 0.00986 **

## Progindex 0.013452 0.001947 6.909 1.39e-08 ***

## Enzyme 0.014954 0.001809 8.264 1.43e-10 ***

## Liver 0.008016 0.046708 0.172 0.86450

## Age -0.003566 0.002752 -1.296 0.20163

## Gender 0.084208 0.060750 1.386 0.17253

## Alcmod 0.057864 0.067483 0.857 0.39574

## Alcheav 0.388383 0.088380 4.394 6.69e-05 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

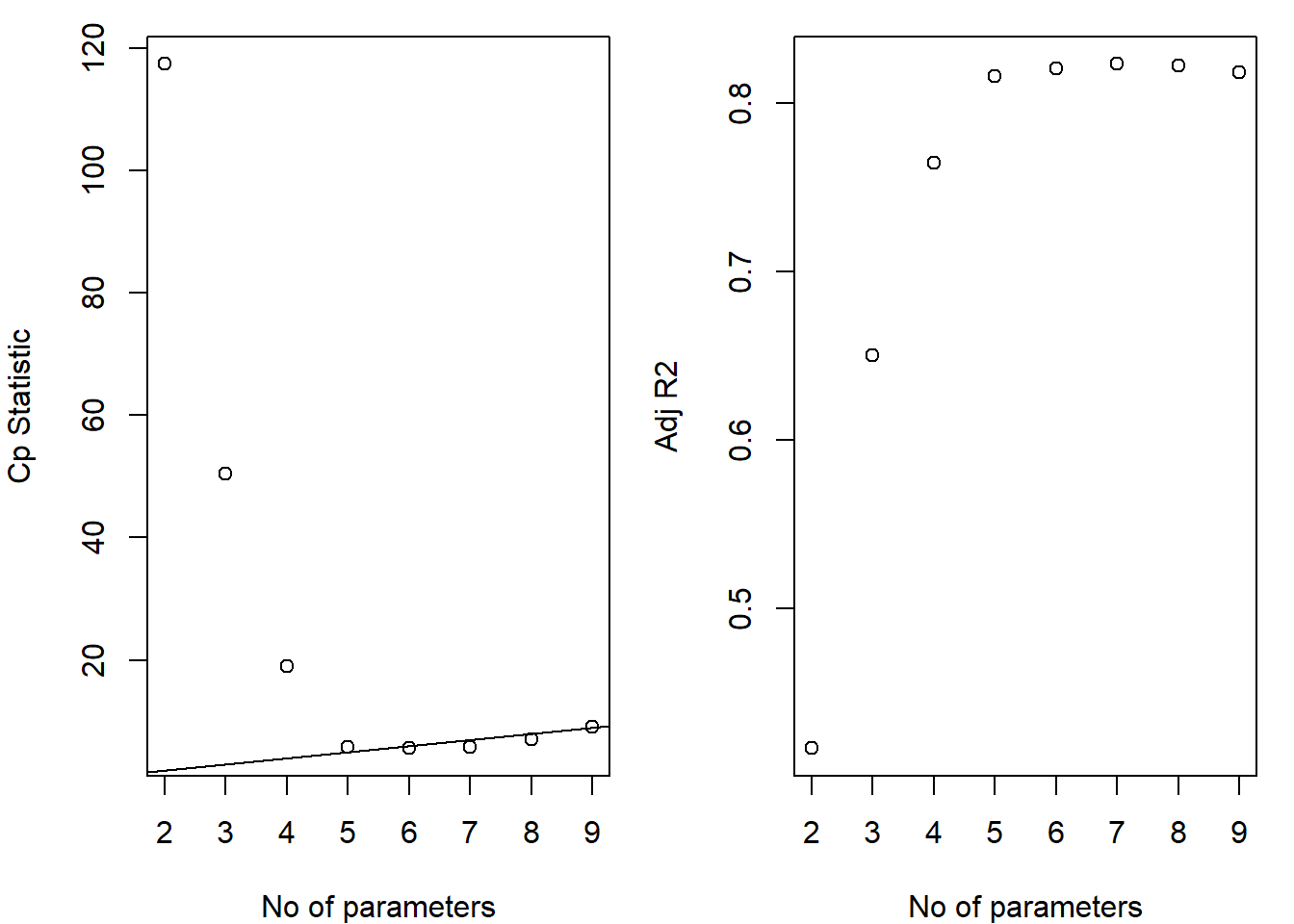

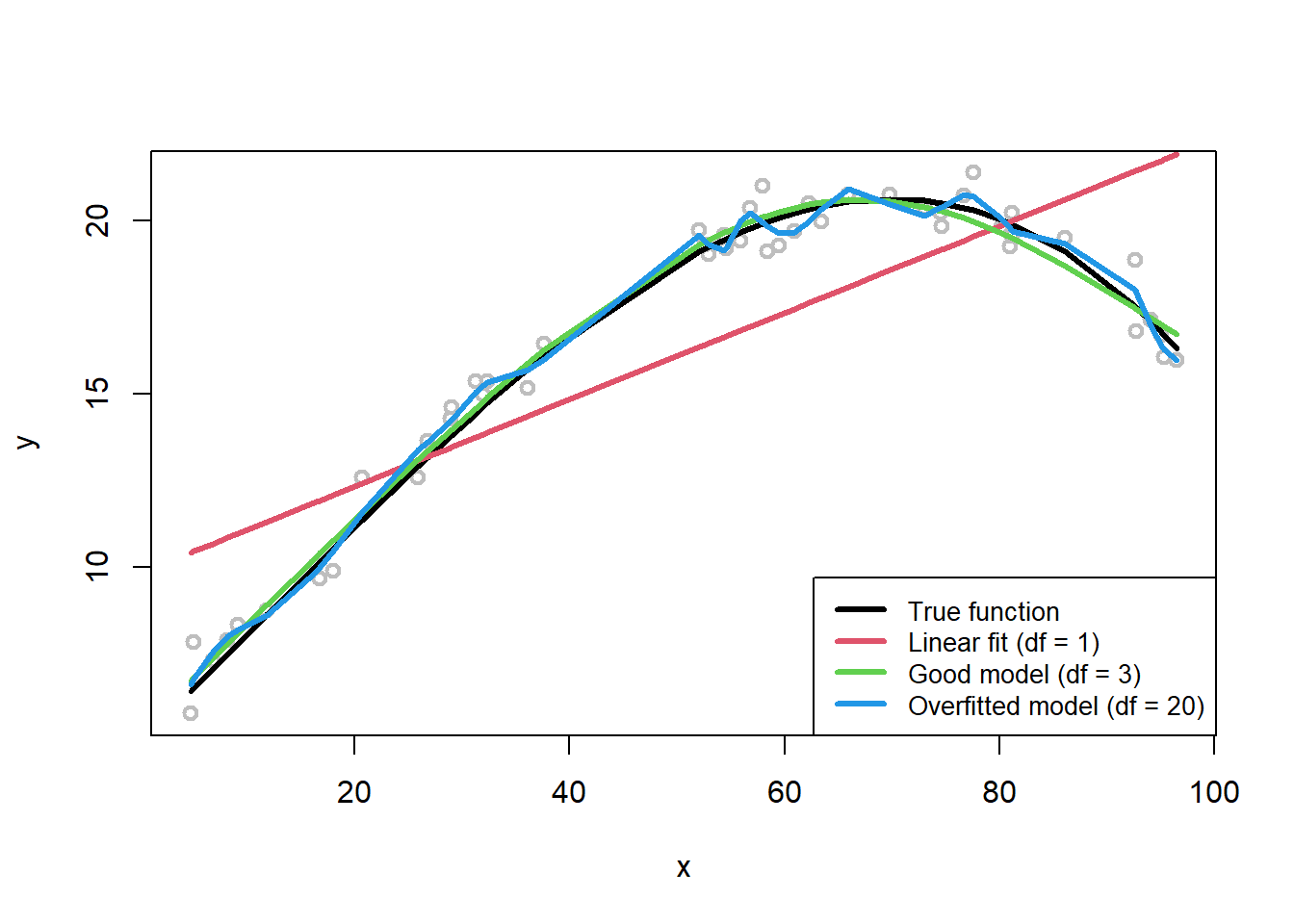

##